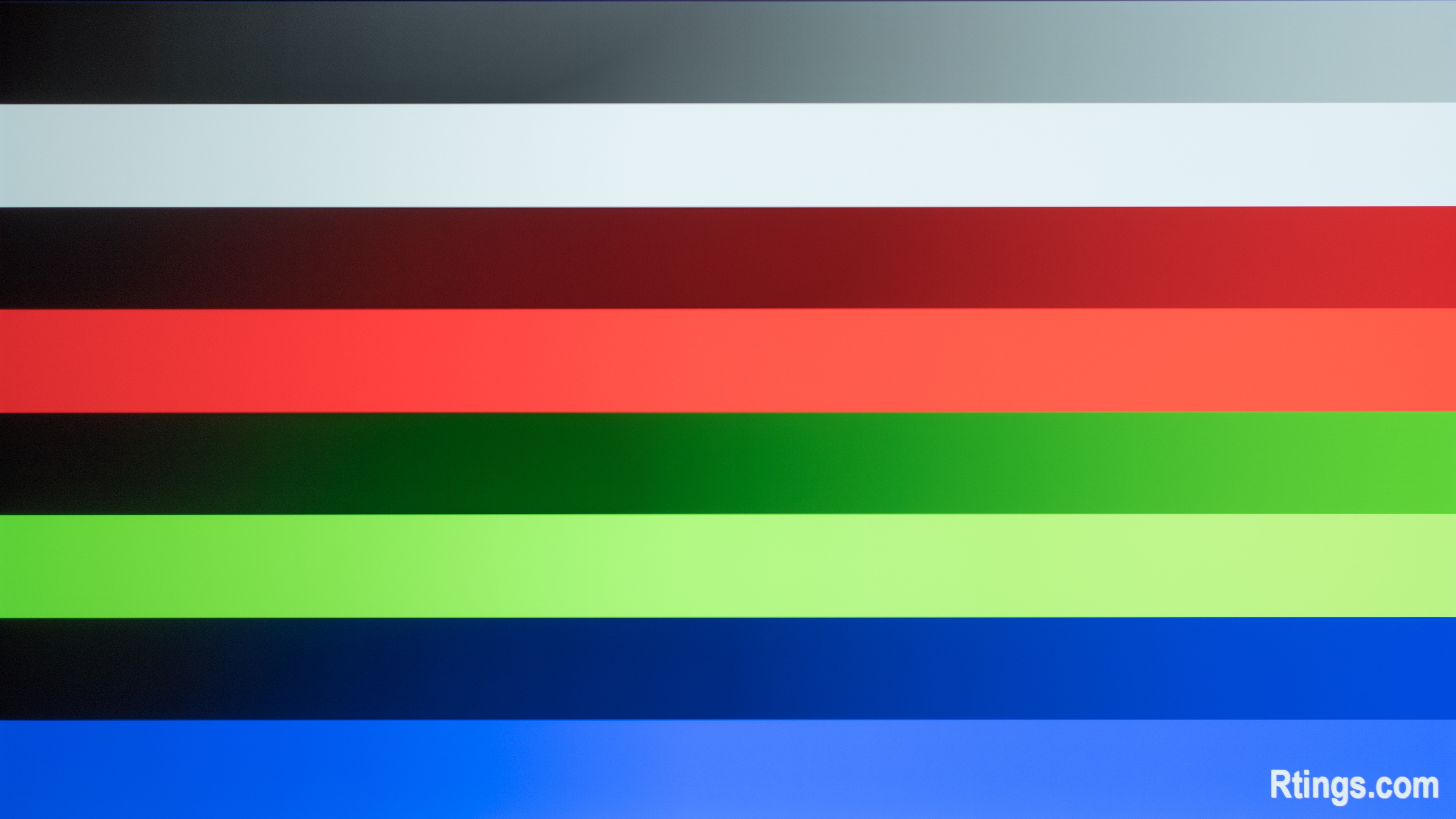

- 12.5%: 100% Black to 50% Gray

- 12.5%: 50% Gray to 100% White

- 12.5%: 100% Black to 50% Red

- 12.5%: 50% Red to 100% Red

- 12.5%: 100% Black to 50% Green

- 12.5%: 50% Green to 100% Green

- 12.5%: 100% Black to 50% Blue

- 12.5%: 50% Blue to 100% Blue

Color gradients are areas of an image where shades of similar colors blend into one another. If you're watching a movie with a sunset, the sky gradually goes from yellow at the bottom to orange and eventually becomes dark blue at the top. In each section, there are shades of similar colors, and we want to know how well the TV transitions between the shades. If you watch a sunset with your own eyes, you won't see any lines or banding, but you may see this on TVs. Having a TV with good gradient handling is important for watching HDR content because HDR requires a wider range of colors and more color depth.

To test for gradient handling, we use a test video that transitions four colors from their darkest to most saturated shades, and a colorimeter measures the standard deviation. We take a photo of a test pattern to show what the gradients look like. We also check the TV's color depth, meaning whether it accepts a 10-bit or 8-bit signal.


When It Matters

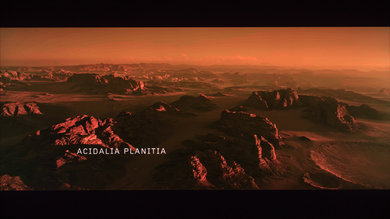

Good gradient handling is important for producing fine details, particularly in scenes with many different shades, like in HDR. Since detailed color is meant to be one of the benefits of HDR video, the results of this test are important for people interested in that kind of media. You can spot the differences between good and bad gradient handling in the photos above. On the left, there's no banding in the sky, and you see the different shades of orange, but on the right, you see obvious banding, and the display doesn't transition well between the shades. While this isn't the most important aspect of a TV for most people, it helps provide a realistic HDR viewing experience.

Our Tests

There are three different parts to testing for gradient handling: the photo, the standard deviation, and the color depth. The photo shows what the gradients look like on the screen. The standard deviation, measured with a colorimeter against the test pattern, gives an objective measure of the gradient handling. The color depth tells us which type of signal the TV accepts, 10-bit or 8-bit.

Picture

Our picture test captures the appearance of gradients on a TV’s screen. This is to give you an idea of how well the TV can display shades of similar color, with worse reproduction taking the form of bands of color in the image. Because this photo's appearance is limited by the color capabilities of your computer, screen, browser, and even the type of file used to save the image, banding that is noticeable in person may not be as apparent in the image. You may also see banding in the photo that is caused by your own display and isn't actually present on the TV.

Above, you can compare what excellent and poor gradient handling looks like. If you look closely, you can see more obvious banding in the right image, particularly in the green gradients.

For this test, we display the test pattern on the TV from a PC using an HDMI connection, and our testers write subjective notes on what it looks like and what score they would expect it to get. They leave the image on the display overnight so the TV warms up, and in the morning, the tester uses a camera to take a photo of the pattern on the TV. We use a 1/15 second shutter speed with an f/4.0 aperture and ISO set to 200, and save the image as a TIFF file because JPG and PNG don't support 10-bit color depth well.

Scoring

The final scoring is based on the standard deviations of the four different colors: red, blue, green, and gray. We connect a Dell Alienware laptop with a GTX 1050 Ti graphics card to the TV. We place a Colorimetry Research CR-100 colorimeter in front of the TV, and we play a video that transitions from the darkest shade of every color to the most saturated. The Octave software reads these measurements from the colorimeter and calculates the standard deviation of each color. Standard deviation represents how close the image comes to the original test pattern; a lower standard deviation is better. Each color's result is calculated as an average of its standard deviations, and anything less than 0.12 is good.
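
As a rough illustration of this kind of calculation, the sketch below computes a standard deviation for each color ramp and compares it to the 0.12 threshold. The exact computation performed in Octave isn't detailed here, so most of this is an assumption: the readings are hypothetical normalized values between 0 and 1, the error is taken as the difference between each measured step and an ideal linear ramp, and the `gradient_std` function name and the sample data are made up for the example.

```python
from statistics import mean, pstdev

GOOD_THRESHOLD = 0.12  # from the text: anything less than 0.12 is good


def gradient_std(measured):
    """Std dev of the error between measured steps and an ideal linear ramp.

    Assumption: `measured` holds normalized readings (0.0 to 1.0) taken at
    evenly spaced points along one color's dark-to-saturated ramp.
    """
    n = len(measured)
    ideal = [i / (n - 1) for i in range(n)]
    errors = [m - t for m, t in zip(measured, ideal)]
    return pstdev(errors)


# Hypothetical readings for illustration only, not real measurements.
readings = {
    "gray": [0.00, 0.25, 0.50, 0.75, 1.00],   # matches the ideal ramp
    "red": [0.00, 0.20, 0.50, 0.80, 1.00],
    "green": [0.00, 0.30, 0.50, 0.70, 1.00],
    "blue": [0.00, 0.25, 0.55, 0.75, 1.00],
}

per_color = {name: gradient_std(vals) for name, vals in readings.items()}
for name, value in per_color.items():
    print(f"{name}: {value:.4f} (good: {value < GOOD_THRESHOLD})")
print(f"average of the four colors: {mean(per_color.values()):.4f}")
```

A ramp that tracks the ideal pattern exactly gives a standard deviation of 0, and larger departures from the pattern push the value up.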

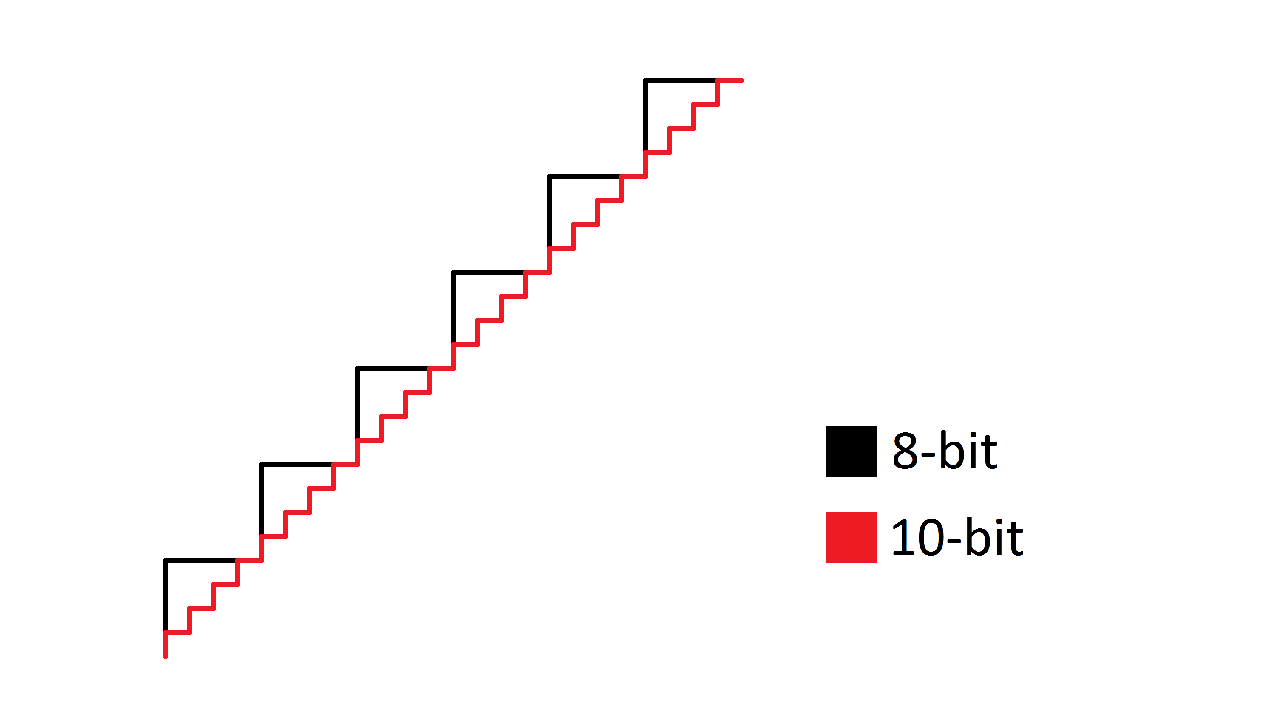

Color Depth

Color depth is the number of bits of information used to tell a pixel which color to display. 10-bit color depth means a TV uses 10 bits for each of the three subpixels of a pixel (red, green, and blue), compared to the standard 8 bits. This allows a 10-bit TV to display many more colors: 1.07 billion versus 16.7 million on 8-bit TVs. Nearly every 4k TV now accepts 10-bit signals, and the only 8-bit TVs we've tested under our latest test bench are 720p or 1080p displays.
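
The color counts above follow directly from the number of bits per subpixel. A minimal sketch of the arithmetic, where `color_count` is just an illustrative helper name:

```python
def color_count(bits_per_channel, channels=3):
    """Number of distinct colors when each channel has 2**bits levels."""
    return (2 ** bits_per_channel) ** channels


for bits in (8, 10):
    levels = 2 ** bits
    print(f"{bits}-bit: {levels} levels per subpixel, {color_count(bits):,} colors")
# 8-bit: 256 levels per subpixel, 16,777,216 colors
# 10-bit: 1024 levels per subpixel, 1,073,741,824 colors
```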

10-bit can capture more nuance in the colors being displayed because there's less of a "leap" from unique color to unique color. However, having a display that accepts a 10-bit signal is only beneficial if you're watching 10-bit HDR content; otherwise, you're limited to 8-bit content in video games or from your PC. We aren't actually testing to see whether or not the panel is 10-bit or 8-bit; we just want to know what type of signal it accepts. Also, accepting a 10-bit signal doesn't guarantee good picture quality: a TV with a narrow color gamut still won't display colors as it should.

We verify color depth while performing our picture test. Using the NVIDIA 'High Dynamic Range Display SDK' program, while outputting a 1080p @ 60Hz signal with 12-bit color depth, we display our 16-bit gradient test image, analyze the displayed image, and look for any sign of 8-bit banding. If we don't see any 8-bit banding, it means the TV supports 10-bit color. We don't differentiate between native 10-bit color and 8-bit color + dithering because we score the end result of how smooth the gradient is. Even though most current TVs max out at 10-bit color, sending a 12-bit signal helps to allow processing (like white balance adjustments) to be enabled without adding banding.

Additional Information

Banding in Gradients

Two things can happen with banding: either two colors that are supposed to look similar look very different, or two colors that are supposed to look different end up appearing the same. Both happen because the TV can't display the necessary color, so it groups it with another, and the result is visible bands on the screen.

Therefore, if you see lots of banding in a gradient, it means one of three things:

- The signal isn’t carrying enough bits to differentiate lots of similar colors.

- The screen’s bit depth is not high enough to follow the detailed instructions of a high-bit signal.

- The TV’s processing is introducing color banding.

With a high bit-depth signal played on a TV that supports it, more information is used to determine which colors are displayed. This allows the TV to differentiate between similar colors more easily and thereby minimize banding.
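
To show why more bits mean less visible banding, the sketch below quantizes a smooth horizontal ramp at two bit depths and counts how many distinct shades survive. It's a simplified model under stated assumptions: a single channel, a linear ramp, and a hypothetical 3840-pixel-wide image; `band_stats` is an illustrative name, and real TVs add processing and gamma that this ignores.

```python
def band_stats(bits, width_px=3840):
    """Quantize a smooth left-to-right ramp and describe the resulting bands."""
    levels = 2 ** bits
    # Ideal ramp: one brightness value per pixel column, from 0.0 to 1.0.
    ramp = [x / (width_px - 1) for x in range(width_px)]
    # Round each value to the nearest code the signal can carry.
    codes = [round(v * (levels - 1)) for v in ramp]
    distinct = len(set(codes))
    # Average band width in pixels: fewer shades means wider bands.
    return distinct, width_px / distinct


for bits in (8, 10):
    distinct, avg_width = band_stats(bits)
    print(f"{bits}-bit: {distinct} distinct shades, "
          f"about {avg_width:.2f} px per band")
```

With 8 bits the ramp collapses into 256 shades, each about 15 pixels wide, while 10 bits gives 1024 shades that are under 4 pixels wide, so the steps are much harder to see.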

As mentioned above, the processor affects the banding. Sony TVs are known for their exceptional gradient handling (at the time of writing, 7 of our 8 best TVs for gradient handling are from Sony), mainly because their processor is better at gradient handling than the competition. Also, higher-end TVs tend to have better gradient handling because they have better processing than lower-end options.

Dithering

There are two kinds of dithering, both of which can simulate the reproduction of colors:

- Spatial dithering. This is done by sticking two different colors next to each other. At a normal viewing distance, those two colors will appear to mix, making us see the desired target color. This technique is often used both in print and in movies.

- Temporal dithering. Also called Frame Rate Control (FRC). Instead of sticking two similar colors next to each other, a pixel will quickly flash between two different colors, thus making it look to observers like it is displaying the averaged color.

With good dithering, the result can look very much like higher bit-depth, and many TVs use this process to smooth out gradients onscreen. 8-bit TVs can use dithering to generate a picture that looks very much like it has a 10-bit color depth. However, it's not common for modern 4k TVs to use this technique; we see it more often on monitors.
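
Temporal dithering can be sketched in a few lines. The example below approximates a 10-bit code on an 8-bit panel by alternating between two neighboring 8-bit codes over four frames. This is a simplified illustration, not how any particular TV implements FRC: the `frc_frames` name, the four-frame cycle, and the divide-by-four mapping between 10-bit and 8-bit codes are all assumptions made for the example.

```python
def frc_frames(target_10bit, n_frames=4):
    """Approximate a 10-bit code on an 8-bit panel by alternating two codes."""
    # Assumed mapping: four 10-bit steps fit inside one 8-bit step.
    base, remainder = divmod(target_10bit, 4)
    # Show the next-higher 8-bit code on `remainder` out of every 4 frames,
    # clamped to the highest code an 8-bit panel can display.
    return [min(base + 1, 255) if i % 4 < remainder else base
            for i in range(n_frames)]


frames = frc_frames(513)               # 513 / 4 = 128.25 in 8-bit terms
average = sum(frames) / len(frames)
print(frames, average)                 # [129, 128, 128, 128] 128.25
```

The pixel never shows 128.25 on any single frame, but flashing 129 once in every four frames makes the average over time land on the in-between shade.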

Different Testing Between Monitors and TVs

We also test for gradient handling on monitors, but we test it differently than on TVs. We use the same test pattern, but instead of objectively measuring it, we subjectively look at it and assign a score. Because of this, you can't compare the gradient scores between the two types of products.

Learn more about gradient handling on monitors

Does Resolution have an Effect?

The resolution doesn't affect the gradient handling. Resolution defines the number of pixels, while color depth determines the number of colors each pixel can display, so having more pixels doesn't change anything. An 8k TV won't inherently have better gradient handling than a 4k TV just because it has a higher resolution.

How to get the best results

The TV needs to be able to accept true 10-bit signals from the source for more detailed color. For minimal banding with TVs, you must watch a 10-bit media source on a 10-bit TV. When watching HDR media from an external device, like a UHD Blu-ray player, you should also make sure that the enhanced signal format setting on the TV is enabled for the input in question. Leaving this disabled may result in banding.

A TV with a higher HDMI bandwidth allows for more color depth. For example, with a 4k @ 60Hz signal, an HDMI 2.0 TV can only achieve 10-bit color with chroma subsampling, such as chroma 4:2:0, and not with full chroma 4:4:4. In contrast, an HDMI 2.1 TV can reach a maximum of 12 bits with a 4k @ 120Hz signal and chroma 4:4:4, so you get more details in this type of signal. However, you can only achieve this signal with a full 48 Gbps bandwidth, which some HDMI 2.1 TVs don't even have.
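
To illustrate why bandwidth limits color depth on HDMI 2.0, the sketch below estimates the link clock needed for a 4k @ 60Hz signal at different bit depths. It's a simplified model that rests on figures not given in this article and should be treated as assumptions: a 594 MHz pixel clock for standard 4k @ 60Hz timing, a 600 MHz HDMI 2.0 clock limit, a clock that scales with bits per channel, and a clock that halves with chroma 4:2:0. The `tmds_rate_mhz` helper is a made-up name, and the model doesn't cover HDMI 2.1 signaling.

```python
HDMI_2_0_MAX_TMDS_MHZ = 600   # assumed HDMI 2.0 link clock limit
PIXEL_CLOCK_4K60_MHZ = 594    # assumed pixel clock for standard 4k @ 60Hz timing


def tmds_rate_mhz(pixel_clock_mhz, bits_per_channel, chroma):
    """Simplified link clock estimate for 4:4:4 and 4:2:0 signals."""
    # 4:2:0 carries a quarter of the chroma samples, halving the clock.
    chroma_factor = {"4:4:4": 1.0, "4:2:0": 0.5}[chroma]
    # Deeper color scales the clock relative to the 8-bit baseline.
    return pixel_clock_mhz * chroma_factor * bits_per_channel / 8


for chroma in ("4:4:4", "4:2:0"):
    for bits in (8, 10, 12):
        rate = tmds_rate_mhz(PIXEL_CLOCK_4K60_MHZ, bits, chroma)
        verdict = "fits" if rate <= HDMI_2_0_MAX_TMDS_MHZ else "exceeds HDMI 2.0"
        print(f"4k @ 60Hz {chroma} {bits}-bit: {rate:.2f} MHz ({verdict})")
```

Under these assumptions, 8-bit 4:4:4 just fits, 10-bit 4:4:4 doesn't, and 10-bit 4:2:0 fits comfortably, which is why the extra color depth comes with reduced chroma on HDMI 2.0.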

If you have followed these steps and still see banding, try disabling any processing features that are enabled. Things like ‘Dynamic Contrast’ and white balance calibration settings can result in banding in the image. If you still see banding after trying different settings, it means the TV has gradient handling issues and there's not much you can do about it.

Gradient enhancement settings

Most modern TVs have settings specifically meant to improve gradient handling. Enabling these can help smooth out gradients, but it also comes at the cost of losing fine details. The setting names for each brand are listed below:

- Hisense: Digital Noise Reduction and Noise Reduction

- LG: Smooth Gradation

- Samsung: Noise Reduction

- Sony: Smooth Gradation

- TCL: Noise Reduction

- Vizio: Contour Smoothing

Other Notes

- Although 10-bit content was rare a few years ago, it's now more common thanks to HDR content. However, most non-4k content and signals from PCs are still 8-bit.

- Some TVs will exhibit a lot of banding when the color settings are calibrated incorrectly. If you have changed the white balance or color settings and find your TV has banding, try resetting those settings to defaults and see if that solves the problem.

Conclusion

Gradient handling defines how well a TV displays shades of similar colors. A TV with good gradient handling can display an image with many shades of similar colors without any visible banding. Good gradient handling is important for HDR content, which displays more colors than non-HDR content. We objectively measure the gradient handling, and we check the TV's color depth, which tells us whether it accepts a 10-bit or 8-bit signal.

Adjusting a TV's settings can often help it achieve the best gradient handling possible, but some lower-end TVs are bad at it, and you'll always see banding no matter which settings you use.