We've tested over 215 soundbars since September 2019, continuously refining our approach across four generations of testing methodologies to provide our readers with accurate and thorough reviews. Our approach stands in stark contrast to what you might find elsewhere—we don't simply receive a review sample in the mail, skim a media spec sheet, and immediately release a video. Instead, our reviews are the result of coordinated efforts between several specialized teams, beginning with the development of our rigorous test protocols, continuing through data-based product selection, professional photography, comprehensive testing, detailed writing, and video production. By the time we publish a review, we've taken hundreds of measurements and written thousands of words. In the sections below, we'll walk you through our review process from start to finish, giving you a more detailed look at how we go from data to diction.

Philosophy

At the heart of everything we do are two principles prominently displayed on our website's landing page:

Find The Best Product For Your Needs

We purchase our own products and put them under the same test bench so you can easily compare the results. No cherry-picked units sent by brands. Only real tests.

These aren't just marketing slogans—they're the guiding philosophies that shape the direction of every aspect of our review process.

Product Selection and Purchase

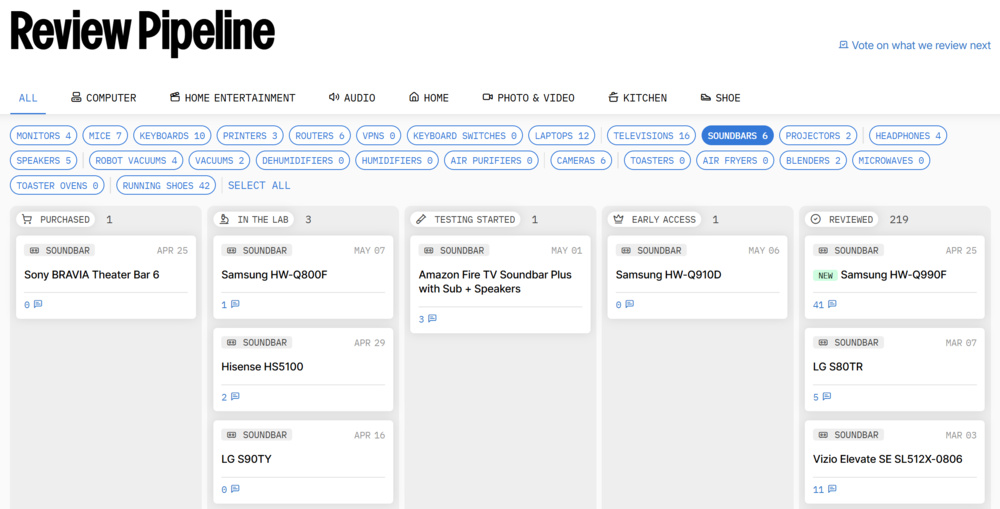

Product selection marks one of the first steps in our review process. As with our other product categories, the number of soundbars launched each year can surpass our capacity to review new products while keeping previous releases up to date with our latest testing protocols. This reality necessitates a strategic approach to choosing which soundbars to evaluate.

We carefully identify the soundbars that interest our community, prioritizing them based on search trends and other in-house metrics that help us understand which products are more desired. While data drives most decisions in product selection, we make exceptions based on the insights of our team of audio experts, who might spot an innovative feature or notable trends worth exploring. Our community polls, where you can vote for the next soundbar we review, serve as an additional layer of confirmation, helping us validate our direction and ensuring fewer products slip through the cracks.

Throughout the selection phase, we're committed to replicating the consumer experience, so we purchase from online retailers or local stores just as you would. This keeps us away from special review units that may not reflect your actual experience with the product. By purchasing our test units anonymously through retail channels, we ensure the soundbars we evaluate are identical to the ones you'll bring home.

Standardized Tests

We put each soundbar through its paces on a standardized test suite, forming the foundation of our reviews and enabling apples-to-apples comparisons between products. This consistency between product evaluations powers tools like our comparison tool and table tool, allowing you to evaluate products in parallel or filter through our results database based on your preferences.

With our deeply data-focused approach, accuracy is critical, which is why our review process includes multiple layers of validation. We cross-check results and identify anomalies to maintain a high level of quality. But we don't stop there. We're always looking to improve our test benches, whether that means revamping a test's methodology, introducing new tests to reflect technological shifts, or making adjustments in response to community feedback. You can stay up to date with changes to our soundbar testing through our changelogs.

That said, these changes can break compatibility with products on older test benches, so we carefully consider products in our existing inventory to determine which models should be updated with each new test bench. Not every product makes the cut, so we prioritize products that are widely available or serve as benchmarks for performance.

We also recognize the limitations of standards when reviewing products; not every feature or use case is captured by predefined metrics. That's why we often go outside the methodology—adapting our approach when we need to dig deeper and explore functionality to match what you'll experience if you purchase the product.

Preparation

Once a soundbar arrives and is unpacked by our inventory team, one of our in-house professional photographers runs through a standardized gamut of photographs. Images are typically mapped to match a specific test, ensuring that even the photographed aspects of different soundbars are comparable. That said, we aren't afraid to step outside the norm when necessary, especially if a soundbar includes additional ports or design flourishes that warrant a closer look.

After photography is complete, the soundbar is handed off to a single tester who is responsible for the product for the duration of the testing process. Assigning the product to a single person helps ensure a deep understanding of the soundbar and allows the tester to log observations during regular use.

Testing Process

Before diving into more detail, it helps to understand how we structure our testing process. While there isn't a hard and fast order for soundbar testing, we typically start by positioning the soundbar, subwoofer, and satellites following the included manufacturer placement guide (when applicable) in our dedicated soundbar testing room. The setup process also involves initial listening, exploration of menus and processing settings, and running through different types of content to develop a sense of the soundbar's behavior in regular use. From there, we move to structured testing, which is divided into four categories, some of which involve specialized equipment and controlled testing conditions.

| Design | Sound | Connectivity | Additional Features |
|---|---|---|---|
| Style - Bar | Stereo Frequency Response | Inputs/Outputs - Bar | Interface |
| Style - Subwoofer | Stereo Frequency Response With Preliminary Calibration | Audio Format Support: ARC/eARC | Bar Controls |
| Style - Satellites | Stereo Soundstage | Audio Format Support: HDMI In | Remote |
| Dimensions - Bar | Stereo Dynamics | Audio Format Support: Optical | Voice Assistants Support |
| Dimensions - Subwoofer | Center | Audio Latency: ARC | |
| Dimensions - Satellites | Surround 5.1 | Audio Latency: HDMI In | |
| Mounting | Height (Atmos) | Audio Latency: Optical | |
| Build Quality | Sound Enhancement Features | Video Passthrough to TV | |
| In The Box | | Wireless Playback | |

You can also monitor the review process for products via our status tracker on the product's dedicated discussion page.

Design

The design section provides a straightforward overview of the soundbar's physical characteristics, including its dimensions, styling, and overall footprint. We also evaluate any discrete components, such as subwoofers or satellite speakers, to give you the full picture of the product. Beyond looks, we assess mounting options and subjectively score the build quality of the overall product based on factors like materials, construction, and tolerances.

Sound

As you'd expect from the product category's name, sound is the centerpiece of any soundbar review; our testing methodology aims to evaluate audio performance in a way that reflects how you'll experience the soundbar in your home. We conduct our measurements in a 20' x 16' x 9.5' room with a single couch and minimal acoustic treatment. This in-situ setup helps us capture how the soundbar performs in a realistic living space.

To assess performance across different listening environments, we measure the soundbar's sonic characteristics from three fixed distances using a calibrated microphone array made up of multiple Dayton Audio EMM-6 microphones and a single Earthworks M23. During testing, we turn off all sound enhancements and processing features to create a level playing field, with room correction as the sole exception. Under these conditions, we evaluate the performance of the individual channels of the soundbar and its components (Stereo, Center, Surround 5.1, and Height (Atmos)). However, we score the Height (Atmos) test subjectively to better account for the spatial qualities of the height channels. Stereo soundstage is likewise scored subjectively, though we use a binaural microphone rig to record how the left and right channels interact.
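To illustrate how readings from several microphones might be combined into a single curve, here's a minimal Python sketch of power averaging, a common technique in acoustic measurement. It's an assumption for illustration, not a description of our exact processing: it assumes each microphone's measurement is stored as a dictionary mapping frequency (Hz) to level (dB SPL), and the function names are hypothetical.

```python
import math


def power_average_db(levels_db):
    """Average SPL readings (dB) by converting them to linear power first.

    Averaging in the power domain keeps a single deep null at one
    microphone position from dragging the result down as much as a
    plain arithmetic mean of dB values would.
    """
    if not levels_db:
        raise ValueError("need at least one reading")
    mean_power = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_power)


def average_response(responses):
    """Combine per-microphone frequency responses into one curve.

    Hypothetical data layout: each response is a dict mapping
    frequency in Hz to level in dB SPL, and all responses share
    the same set of frequencies.
    """
    frequencies = responses[0].keys()
    return {f: power_average_db([r[f] for r in responses]) for f in frequencies}


# Usage: two microphones that agree at 1 kHz but differ at 100 Hz.
mics = [{100: 85.0, 1000: 80.0}, {100: 82.0, 1000: 80.0}]
combined = average_response(mics)
```

With identical readings the average equals the input, while differing readings land closer to the louder one than a simple dB mean would.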

In addition to raw acoustic performance, we document the presence and effectiveness of sound enhancement features like virtual surround modes and voice enhancement tools. Because these features typically alter the soundbar's output and can influence our measurements, we test them separately to understand how well they enhance the real-world listening experience. Some features, like Samsung's Q-Symphony—found on models such as the HW-Q990F—fall outside the scope of our typical testing, as they require integration with specific Samsung TVs to enable those TVs to function as additional speaker channels. While we don't formally test these setups, we still highlight them in our reviews to help users understand the potential benefits within compatible ecosystems.

Connectivity

Samsung HW-Q990F audio/visual desync with our testing TV over eARC/ARC, HDMI In, and Optical.

No matter how impressive a soundbar sounds, its performance won't matter if it doesn't work with your setup. This section details the available physical inputs and assesses how well they perform. We thoroughly evaluate audio format support and audio latency over HDMI (ARC/eARC passthrough and HDMI In) and Optical using a combination of a demo disc, test files, and a Murideo Seven-G signal generator. This information shows how reliably the soundbar handles audio formats like Dolby Atmos and DTS:X, and whether you'll notice audio/visual desync when using a console. We also assess video passthrough to the TV, including supported formats, resolutions, and refresh rates, along with wireless playback options.
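Latency measurements ultimately come down to finding the delay between when a signal is sent and when it's heard. As a hypothetical illustration, not a description of the tooling we actually use, the Python sketch below estimates that delay by cross-correlating a reference test signal with a microphone recording that starts at the same instant, then converts the best-matching lag from samples to milliseconds. The function names, and the assumption that both signals share a sample rate and start time, are ours for this example.

```python
def estimate_delay_samples(reference, recorded, max_lag):
    """Return the lag (in samples) at which `recorded` best matches `reference`.

    Brute-force cross-correlation: for each candidate lag, take the dot
    product of the reference with the recording shifted by that lag.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(
            reference[i] * recorded[i + lag]
            for i in range(len(reference))
            if i + lag < len(recorded)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def latency_ms(reference, recorded, sample_rate, max_lag):
    """Convert the estimated delay into milliseconds (hypothetical helper)."""
    delay = estimate_delay_samples(reference, recorded, max_lag)
    return 1000.0 * delay / sample_rate


# Usage: a short test pulse that shows up 480 samples late at 48 kHz.
pulse = [0.0, 1.0, -0.5, 0.25]
mic_capture = [0.0] * 480 + pulse + [0.0] * 100
delay = latency_ms(pulse, mic_capture, sample_rate=48000, max_lag=1000)
```

A brute-force search like this is fine for short clips; longer recordings would typically use an FFT-based correlation instead.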

Additional Features

Finally, we look at features that don't directly impact the soundbar's score but can affect usability. This includes the design and responsiveness of the remote, the layout and functionality of onboard controls, and information available on the user interface. We also check for voice assistant integration like Amazon Alexa and Google Assistant.

Validation

Before publishing official results, our soundbar reviews undergo the first of two peer-review phases, involving an additional tester and the assigned writer. While this stage may be publicly invisible, it's an essential quality control step that helps ensure our measurements are accurate.

Both team members independently scrutinize the test results, flagging any unexpected results or anomalies that don't align with what we'd typically expect. We meticulously cross-check our findings with user reports, similarly positioned products, and reported manufacturer specifications, while also identifying leads that may provide deeper insights into specific performance aspects. Sometimes the process results in straightforward confirmation; other times, it prompts further investigation beyond standard testing.
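As a rough illustration of checking whether a result aligns with what we'd typically expect, the Python sketch below flags a value that sits more than a chosen number of standard deviations from the results of comparable products. The two-standard-deviation threshold and the function name are hypothetical; a check like this only highlights results worth a second look, and the team members reviewing the data still make the final call.

```python
from statistics import mean, stdev


def flag_anomaly(value, peer_values, threshold=2.0):
    """Return True if `value` is more than `threshold` standard deviations
    from the mean of comparable products' results (hypothetical check)."""
    if len(peer_values) < 2:
        return False  # Not enough comparable products to judge.
    spread = stdev(peer_values)
    if spread == 0:
        # All peers measured identically; any difference stands out.
        return value != peer_values[0]
    return abs(value - mean(peer_values)) / spread > threshold


# Usage: a measurement compared against similarly positioned products.
peers = [60, 62, 61, 59, 63]
looks_normal = flag_anomaly(61.5, peers)
needs_second_look = flag_anomaly(75, peers)
```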

Once both parties are confident in the results, we publish the review in early access, making numerical findings available to our insiders.

Reviews

With the results finalized, the focus shifts to writing the full review. Writers take the various test results and shape them into a coherent narrative. Beyond summarizing findings, the text elaborates on features and measurements that exist outside our methodology or scoring. We also use small blurbs that provide market context and usage information for general users who want a quick summary of performance.

Due to the personal and often subjective nature of audio, we go beyond the raw data when crafting our reviews. To help ground the writing in real-world experience, our writers also spend time with new and popular soundbars in our database.

After the draft is written, the review goes through the second and final round of peer review. An additional writer reviews the text for clarity, tone, and flow, while the original tester verifies the technical accuracy of the content. With both satisfied, the review moves to our fantastic editing team, who ensure that the writing adheres to our publication's standards for quality, consistency, and style.

Recommendations

After the publication of each review, our writing team identifies soundbars with performance characteristics that make them potential contenders for inclusion in our recommendation articles. These curated lists give you an up-to-date shortlist of products tailored to specific uses or budgets, helping you navigate the often overwhelming number of options on the market.

While we make extensive use of our comprehensive database of measurements, we don't solely rely on numbers; we apply our knowledge of the user experience when making suggestions, considering practical factors like value proposition and additional features that may enhance your listening experience. This helps account for soundbars that excel in our technical measurements but fall short in real-world usability, or vice versa.

That said, our recommendation articles aren't meant to be the final word on which soundbar you should purchase. Rather, they provide a well-researched starting point for your decision-making process. We encourage you to use them as one part of your research.

Retests

After reviewing a soundbar, we typically retain it in our vast inventory of products so we can revisit it as its life cycle continues and our testing methods evolve. This approach allows us to maintain the relevance of our reviews over time, rather than letting them become static snapshots that quickly grow outdated.

Many factors can trigger a retest, ranging from a methodology update on our end to a firmware update from the manufacturer that changes performance or introduces new features. Community requests also play an important role in our retest decisions—perhaps to evaluate a specific configuration not covered in our initial review or to validate an issue reported by multiple users.

Once triggered, the retest process is essentially a streamlined version of the full review process, involving the tester, writer, and editorial team, all focused on the specific scope of the investigation. To maintain transparency, we leave a public message on the review explaining what was retested and why. If the results affect the product's performance or scoring, we update our review and adjust any related recommendation articles to reflect the most up-to-date information.

Conclusion

Well, that's all for the brief overview of our review process from product selection to publication. If you'd like to watch a video of our review pipeline, check out the video below. You can also find in-depth video reviews and recommendations on the RTINGS.com Audio YouTube Channel.

If you're building out your home theater, you might be interested in our companion piece, How We Test TVs, where we break down our equally meticulous approach to evaluating the visual side of your entertainment experience.