For the past decade, we've been publishing trustworthy reviews for various products, and now we've expanded our expertise to running shoes. We purchase every pair independently, with no cherry-picked samples or freebies from manufacturers. Our rigorous process involves multiple teams and experts, from purchasing to publishing. We do more than just run in these shoes; we use high-tech testing tools to measure various performance aspects with objective, data-driven results. As we continue to develop, we will add tests to our methodology. If you have suggestions for things you'd like to see us test, let us know in the comments.

Each running shoe review includes dozens of individual tests, taking many work days to complete. You're in the right place if you're wondering what goes into producing our running shoe reviews. Below, we'll break down how we deliver objective, data-driven reviews.

Product Selection

Before testing any running shoe, we first buy it. With hundreds of running shoes released each year worldwide, we can't test them all. Instead, we primarily purchase and test models available in the United States that we believe will be most relevant to runners based on popularity and requests. We're currently focusing on road running shoes, but plan to expand to more categories.

There are two primary ways we choose which shoes to buy:

- Popular, New, or Recommended Models: We monitor new releases from major brands like Nike, Adidas, Brooks, On, and New Balance, as well as smaller specialty brands. We also frequently refresh our recommendation articles, like 'Best Running Shoes,' with newly released models in each shoe category to always reflect the most relevant picks for runners. We also consider models that receive a lot of attention in online running communities.

- Voting Tool: Readers can use our voting tool to vote for the running shoes they want us to review next. Each user gets one vote every 60 days (or 10 votes for Insiders). If a model reaches 25 votes, we purchase and test it!

We don't accept review samples from manufacturers; instead, we purchase directly from Amazon, online shoe retailers, and local physical stores. Once they arrive, we document and photograph them before sending them off for testing.

Our Philosophy

We provide authentic product reviews, free from bias or external influence. Our testing process is transparent and rigorous so that readers can trust it when making decisions, and we maintain editorial independence throughout. Every shoe, from high-end super shoes to budget daily trainers, undergoes the same thorough evaluation without influence from brands or advertising.

This principle guides how we test every running shoe. Price and expected performance don't influence our scoring; if an expensive shoe underperforms compared to a cheaper one, it won't receive a higher score based on brand or cost.

Testing

Before we get into the specifics, let's discuss why and how we test shoes. After unboxing and photographing a pair, we pass it to a dedicated tester. We rely on standardized procedures for every review, so you can compare a budget trainer and a premium carbon-plated racer on the same scale: price and marketing claims aren't factored into our scores. We also continuously refine our methodology to stay current with new tech.

Our initial Test Bench 0.8 introduced rigorous tests for energy return, stability, and cushioning. As we expanded the testing pool, we identified edge cases where results didn't fully match real-world performance. This led to Test Bench 0.8.1, where we refined the methodology and added new tests to better evaluate lateral stability and suitability for marathon racing. With Test Bench 0.8.2, we improved the compression tests by adjusting the impact force range and loading pattern to more accurately replicate real-world running.

As we update our process, we'll continue to retest shoes so our findings stay relevant. That said, results from different test benches might not be directly comparable. We see our tests as just a starting point; if runners online raise specific questions, we investigate further. Our standardized methodology is structured into two test categories: Design and Performance.

| Design | Performance (Heel) | Performance (Forefoot) |
|---|---|---|
| Weight | Heel Energy Return | Forefoot Energy Return |
| Shape | Heel Cushioning | Forefoot Cushioning |
| Stack Heights | Heel Firmness | Forefoot Firmness |

Design

You might wonder how exactly we can "test" a shoe's design. We don't really judge style or aesthetics; we focus on measurable aspects of a shoe's build so you can decide if it'll fit well on your feet and meet your running needs. These measurements are specific to our test size (a men's US size 9). Shoe weight, shape, and stack heights can vary between sizes or even between men's and women's versions, so keep that in mind when comparing our results to your own shoe size. Below, we break down the three major design factors we measure: Weight, Shape, and Stack Heights, so you can easily see how a shoe stacks up against others on the market.

Weight

We weigh each shoe (with laces and insole) multiple times using a scientific scale, ensuring we capture an accurate reading for both the left and right shoe in the same size. We then use the average weight to compare different models. This is important because weight differences between shoes (such as a super shoe versus a daily trainer) can impact overall running efficiency.
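The averaging step can be sketched in a few lines. This is only an illustration, not our lab software: the function name and the readings are hypothetical, and the sketch assumes each shoe's repeated readings are averaged first and the left and right results are then averaged together.

```python
from statistics import mean


def average_shoe_weight(left_readings_g, right_readings_g):
    """Average repeated readings for each shoe, then average the two shoes."""
    left_mean = mean(left_readings_g)
    right_mean = mean(right_readings_g)
    return (left_mean + right_mean) / 2


# Hypothetical scale readings in grams (not measured data).
left = [251.0, 251.2, 250.8]
right = [253.0, 253.1, 252.9]
print(f"Average weight: {average_shoe_weight(left, right):.1f} g")
```

Averaging each shoe separately before combining them keeps one shoe from dominating the result if it happens to be weighed more times than the other.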

Shape

We examine each shoe's construction, both internally and externally, to understand its overall fit and shape. Using an Artec Space Spider 3D scanner, we visualize the upper and outsole to assess how the design accommodates various foot types. By measuring internal dimensions such as heel, arch, and forefoot width, we also provide insights into whether the shoe will feel snug, spacious, or in between. Finally, we measure the outsole width and compare it to the stack height; this ratio is part of our evaluation of the shoe's lateral stability.
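The width-to-stack comparison is simple arithmetic and might look like the sketch below. The function name and the numbers are hypothetical, and the sketch assumes the ratio is taken as outsole width divided by stack height; how that ratio feeds into a stability score is not shown here.

```python
def width_to_stack_ratio(outsole_width_mm, stack_height_mm):
    """Return outsole width divided by stack height (dimensionless)."""
    if stack_height_mm <= 0:
        raise ValueError("stack height must be positive")
    return outsole_width_mm / stack_height_mm


# Hypothetical measurements in millimetres (not measured data).
print(width_to_stack_ratio(90.0, 36.0))  # 2.5
```

Under this convention, a higher ratio describes a platform that is wide relative to its height.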

Stack Heights

We examine the thickness of the cushioning by measuring the shoe's midsole at key points along the heel and forefoot. This tells us how much material stands between your foot and the ground, which can significantly influence how the shoe feels on the run. Following World Athletics guidelines, we use a caliper to measure these stack heights while the shoe rests on a flat surface. World Athletics also regulates maximum stack heights, although these limits are mainly relevant to elite marathon runners. We recommend consulting their current guidelines on approved and banned shoe models for the latest information. From these measurements, we also calculate the heel-to-toe drop, an important spec that affects running mechanics and can guide you toward a shoe that matches your preferred running style and strike pattern.
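Heel-to-toe drop is the difference between the two stack heights. As a minimal sketch, with a hypothetical function name and made-up measurements:

```python
def heel_to_toe_drop(heel_stack_mm, forefoot_stack_mm):
    """Drop is the heel stack height minus the forefoot stack height."""
    return heel_stack_mm - forefoot_stack_mm


# Hypothetical stack heights in millimetres (not measured data).
print(heel_to_toe_drop(38.0, 30.0))  # 8.0
```

A shoe with equal heel and forefoot stack heights has a drop of zero.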

Other Features

In addition to these attributes, we assess other design elements crucial to performance. The tongue gusset type and the presence of a plate are important since they impact fit and lateral stability during runs. We evaluate the tongue design by determining whether it's fully gusseted, semi-gusseted, or non-gusseted. When examining plates, we specify materials such as carbon fiber, plastic, or fiberglass alongside design variations like rods or shanks. We also consider any additional unique design aspects or community feedback impacting overall performance.

Performance

To evaluate a running shoe's performance, we rely on a set of standardized mechanical tests that allow us to objectively measure how it behaves under real-world running forces. While the feel and fit are important, our performance tests focus on what the shoe actually does: how it compresses and rebounds under stress. These results help runners understand how a shoe feels out of the box and how it will behave under their specific stride and pace. For a much deeper dive into this process (including data breakdowns, force curves, and mechanical insights), you can check out our full Compression Testing & Energy Return Research article. It walks through how we built the testing setup, how the results are interpreted, and how this data connects to what you actually feel on the run.

Compression

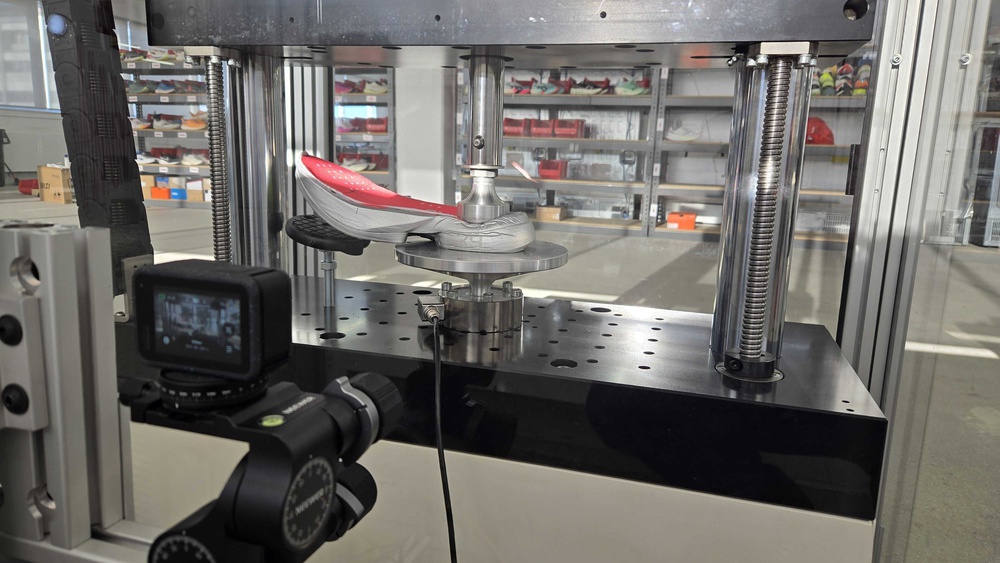

One of the core elements we measure is compression: how much and where a shoe compresses under force. This helps determine how cushioned the shoe feels during loading and landing. We use a universal testing machine with an electrodynamic linear actuator and a load cell at settings designed to simulate the shape and pressure of a human foot striking the ground repeatedly.

We test all shoes across a consistent force range (from 550N to 1950N at the heel and from 800N to 2100N at the forefoot) to reflect the variety of real-world running scenarios. Since impact forces generally rise with body weight and pace, this range helps us capture how the midsole behaves under both light and heavy loads. We use the full force range to calculate an overall cushioning score based on the average compression across that spectrum. To account for different strike patterns and the varying densities or layering of foams, we also split our measurements into heel and forefoot compression. This distinction helps reveal how the shoe responds depending on where you land, whether you're a heel striker, midfoot striker, or forefoot striker. In general, lighter forces are more representative of smaller runners or slower paces, while higher forces simulate heavier runners, faster efforts, and more forceful landings. This approach ensures our results are relevant to a wide range of running styles and body types.
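To make "average compression across the force range" concrete, here is a rough sketch. The force ranges come from the text above; everything else (the function name, the sample format, and the sample values) is hypothetical, and the sketch stops at the average rather than guessing how that average is converted into a score.

```python
# Force ranges in newtons, as stated in the text.
HEEL_RANGE_N = (550, 1950)
FOREFOOT_RANGE_N = (800, 2100)


def average_compression(samples, force_range):
    """Mean compression (mm) over samples whose force lies inside the range.

    `samples` is a list of (force_n, compression_mm) pairs.
    """
    low, high = force_range
    in_range = [mm for force, mm in samples if low <= force <= high]
    if not in_range:
        raise ValueError("no samples inside the force range")
    return sum(in_range) / len(in_range)


# Hypothetical heel samples (not measured data).
heel_samples = [(400, 5.0), (550, 8.0), (1250, 14.0), (1950, 20.0), (2100, 21.0)]
print(average_compression(heel_samples, HEEL_RANGE_N))  # 14.0
```

Samples outside the range, such as the 400N and 2100N heel readings here, are ignored, so the heel and forefoot averages each reflect only their own force window.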

Energy Return

We also evaluate how efficiently the shoe's foam rebounds after compression, commonly called energy return. This indicates how much energy is given back to the runner during decompression, directly influencing how responsive and efficient the shoe feels, especially at faster paces.

We can determine how much of the applied energy is returned with each step by testing how the foam deforms and recovers under a full compression cycle. This makes it easier to identify shoes that feel bouncy and fast versus those that prioritize impact absorption. We test this across a consistent force range (from 550N to 1950N at the heel and from 800N to 2100N at the forefoot, just like compression) since energy return can vary depending on how hard you land, where your foot strikes, and pace. We also measure energy return separately at the heel and forefoot to reflect differences in strike patterns and foam placement. This allows us to capture how different zones of the midsole behave, particularly in shoes with dual-density foams or midsole designs that are more energetic in one area than another.
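One common way to express this is the ratio of the work recovered during unloading to the work put in during loading, where each is the area under the force-displacement curve. The sketch below illustrates that general idea with trapezoidal integration; it is an assumption about the calculation rather than a description of our exact pipeline, and the function names and curve values are hypothetical.

```python
def work_joules(displacement_mm, force_n):
    """Signed trapezoidal area under a force-displacement curve, in joules."""
    total = 0.0
    for i in range(1, len(displacement_mm)):
        step_m = (displacement_mm[i] - displacement_mm[i - 1]) / 1000.0
        total += 0.5 * (force_n[i] + force_n[i - 1]) * step_m
    return total


def energy_return_percent(load_disp, load_force, unload_disp, unload_force):
    """Energy recovered while unloading as a percentage of energy put in."""
    energy_in = work_joules(load_disp, load_force)
    # Displacement decreases during unloading, so the signed area is negative.
    energy_out = -work_joules(unload_disp, unload_force)
    if energy_in <= 0:
        raise ValueError("loading curve must absorb positive energy")
    return 100.0 * energy_out / energy_in


# Hypothetical cycle: the foam pushes back with less force on the way up.
loading = ([0, 10, 20], [0, 1000, 2000])
unloading = ([20, 10, 0], [2000, 600, 0])
print(round(energy_return_percent(*loading, *unloading), 1))  # 80.0
```

The gap between the loading and unloading curves is the energy lost in the foam, which is why a bouncier midsole shows a higher percentage.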

Firmness

We also evaluate firmness, which describes how soft or hard the midsole feels underfoot across different levels of force (from 550N to 1950N at the heel and from 800N to 2100N at the forefoot). This is particularly important because preferences can vary: some runners want a soft, plush ride for comfort, while others prefer a firmer platform that enhances lateral stability and provides a snappier feel in the forefoot.

We test firmness at the heel and forefoot using a linear actuator paired with a load cell, compressing the shoe with forces of up to 2100 newtons. This range allows us to simulate different body weights, foot strike patterns, and running intensities. Because firmness can change depending on how hard and where you land, this test helps us provide a more complete picture of how the shoe will feel for different runners. As with our other performance tests, we break down firmness results by heel and forefoot to account for different strike patterns and for shoes with dual-density foams.
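One generic way to put a number on firmness is stiffness: how many newtons it takes to compress the midsole by one more millimetre between two force levels. The sketch below uses that textbook definition purely as an illustration. It is an assumption, not a statement of how our firmness results are computed, and the function name and readings are hypothetical.

```python
def secant_stiffness_n_per_mm(force_lo_n, disp_lo_mm, force_hi_n, disp_hi_mm):
    """Stiffness between two points on a compression curve, in N/mm."""
    travel_mm = disp_hi_mm - disp_lo_mm
    if travel_mm <= 0:
        raise ValueError("compression must increase between the two points")
    return (force_hi_n - force_lo_n) / travel_mm


# Hypothetical heel readings at the ends of the 550N to 1950N range.
print(secant_stiffness_n_per_mm(550, 6.0, 1950, 20.0))  # 100.0
```

With this convention, a higher value means a firmer midsole, since more force is needed for the same amount of compression.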

Real-World Testing

The mechanical tests give us objective, repeatable data, but we also believe running in the shoes is essential. Our in-house testers wear each model we test for a significant number of miles across a range of paces, conditions, and workouts. This real-world usage helps us verify that the lab data matches what runners actually experience on the road. To keep things consistent, we always use one dedicated pair of each shoe for lab testing and a separate pair for logging miles.

To stay closely aligned with the actual experience of running in each model, we also maintain an internal pool of testers from across departments who borrow shoes and provide structured feedback. We're not looking for isolated anecdotes or one-off opinions; we look for patterns and consensus. When multiple testers experience the same sensation or issue, and it aligns with our lab data, that insight gets integrated into the review. This combination of hands-on testing and data validation helps us catch any discrepancies and deliver a well-rounded, accurate picture of a shoe's performance.

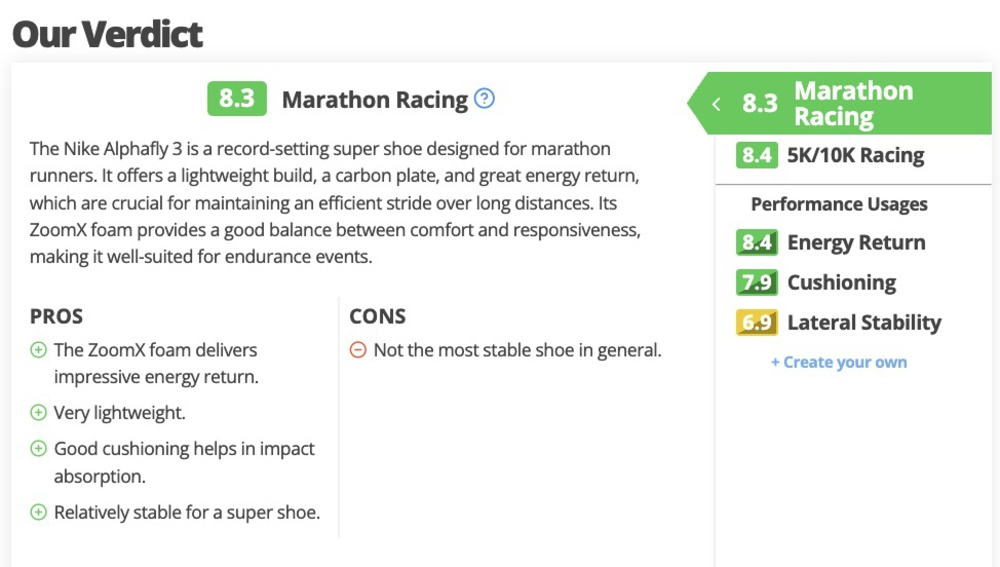

Writing

Once testing is complete, the writing process begins. We aim to take complex lab data and translate everything into a clear, helpful review that reflects how the shoe performs for different types of runners.

The writer's job starts with reviewing all test results and tester notes. If anything is unclear or unexpected, the writer works directly with the tester to validate the findings and ensure everything lines up. If something doesn't add up, we'll pause and recheck the testing before moving forward. Transparency is key; every claim we make in the review is backed by data and widely observed consensus from testing.

We also take the time to understand the shoe's place in the market. Before writing begins, the writer researches where the model fits in the brand's lineup, whether there are other versions or variants (like wide versions), and how it compares to other shoes we've tested, both direct competitors and past iterations.

Writing itself typically takes 1–2 days, followed by a structured peer-review process involving the original testers and a second writer. This process ensures that all the information is accurate, the conclusions are fair, and the review stays free of bias. Once the peer review is complete, the article goes to our editorial team for a final pass. They edit all reviews (and other content we publish) to ensure they follow our internal style guides and guidelines. They catch any inconsistencies, identify unclear language and formatting issues, and ensure that our writing meets the expected standard.

Overall, each running shoe review is the result of collaboration between test engineers, testers, writers, photographers, and editors.

Rewrites and Updates

Publishing a shoe review isn't the end of the process; it's part of a longer lifecycle. We keep every pair of running shoes we test, storing them in our lab so we can revisit models when needed. This gives us the flexibility to update reviews when our test methodology evolves, when community questions arise, or when we want to compare new models to previous versions on equal footing.

As we continue to update our test bench, we'll go back and retest older models so that scores remain consistent and directly comparable. We don't retest every aspect of the shoe, only the parts affected by the update.

Recommendations

After we've tested a shoe and published the review, we take a step back and consider whether we should include it in one of our recommendation articles, our curated best-of lists designed to help runners find the right pair for their needs. These articles are updated regularly, but when a standout model comes along, we'll often update a guide immediately to reflect its inclusion.

When deciding which shoes to recommend, we focus primarily on performance and how each model fits within the broader running shoe landscape. However, we also consider a few practical factors. If two shoes perform similarly but one is significantly cheaper without meaningful trade-offs, we'll favor the more accessible option. We also avoid recommending shoes that are nearly impossible to find; availability matters, and we aim to recommend models that runners can actually buy.

Our recommendation writers are the same people who write the reviews, so they understand the data and the context behind it. If a shoe has strengths that don't show up directly in our scores, they'll highlight those features.

These recommendations are built to serve most runners but aren't one-size-fits-all. If the listed models don't quite match your preferences, you can explore all our data using tools like the comparison tool, filterable tables, or our 3D shape compare tool to make your own personalized choice. Our goal is to give you the clearest possible picture of what each shoe can do so you can confidently find the one that's best for your needs.

Conclusion

There's a lot of work behind each of our running shoe reviews. From unpacking and photographing to testing, writing, and editing, each review goes through a structured, unbiased process to ensure consistency and accuracy. This article is just a quick overview of how we test running shoes. To learn more about our specific methods, check out our research articles.