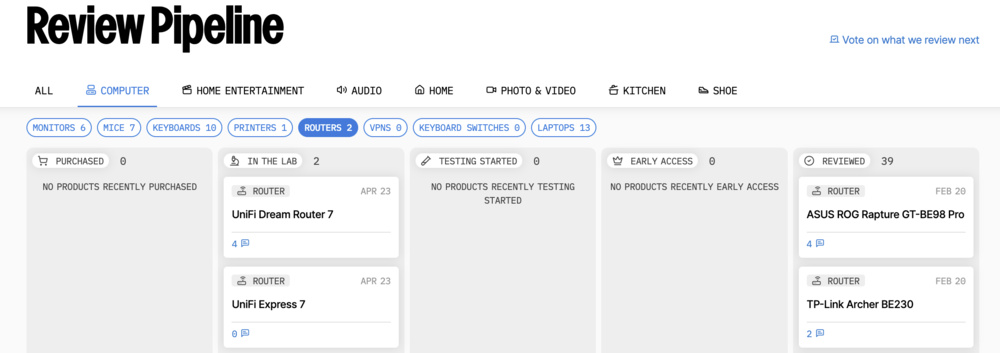

Since we started reviewing them in 2024, we've tested over 35 routers. We maintain complete editorial independence and impartiality by purchasing each router ourselves, which ensures we aren't testing specially selected review units. Rather than casually assessing performance by throwing on Netflix and seeing if it works, we rigorously evaluate each router on the same standardized testing platform, allowing accurate comparisons between different models.

Our review pipeline involves a dedicated team of experts and is more comprehensive than you might expect. In this article, we'll clearly demonstrate our process so you can confidently trust our results. This includes how we select and buy routers, conduct standardized performance tests, and develop our reviews. From beginning to end, this thorough process can span several days to multiple weeks.

Product Selection

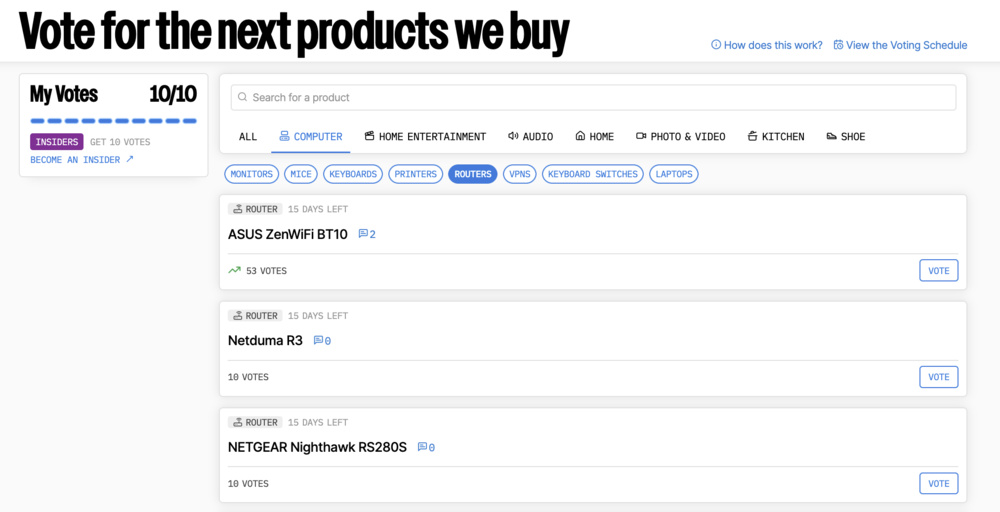

We largely select which routers to review based on how popular they are or how popular we expect them to be. To do this, we analyze search trends and pay close attention to which products people are talking about online. We'll also sometimes buy and test products from specific categories, like budget or mesh models, so that we have a larger selection of products from which to make a recommendation. While we try to buy major releases when they drop, we also rely on input from you and the wider community of enthusiasts online to help guide our choices.

The most direct way that you can help us choose what to review next is by voting in our poll. We restart our polls every 45 days and buy the product that receives the most votes within each cycle. You can also email us at feedback@rtings.com or drop a comment in our forums if there's a particular router you'd like us to review.

How We Buy Products

We buy every product we test just like you would. We don't accept review samples from brands and do our best to purchase anonymously from major retailers. This way, we avoid cherry-picked samples that could excel in our test bench and skew our results. The trade-off is that we don't get products as quickly as other reviewers who receive early samples and often publish their reviews when a router goes on sale.

Standardized Tests

Every router we test undergoes the same testing process, so you can see how they perform on an even playing field. Testing products this way allows you to use our specialized tools, like our side-by-side comparison and custom table tool, to best determine which router suits your specific needs.

We're always looking for ways to improve our testing to reflect new technology and feedback from the community. The result is an iterative approach to our test benches that changes over time. You can track every change we introduce to our test bench in our changelogs. However, this approach also means that when we update our methodology, some older, less relevant products are left behind on older test benches. Depending on how future test benches change, comparisons with older products may become less applicable over time, but this trade-off is an important part of how we keep improving and innovating.

Design

The first section of the review is fairly straightforward, covering basic physical information about the router, like its dimensions, how many networking and USB ports it has, what comes included in the box, and whether or not it has a wall/ceiling mount.

Wi-Fi

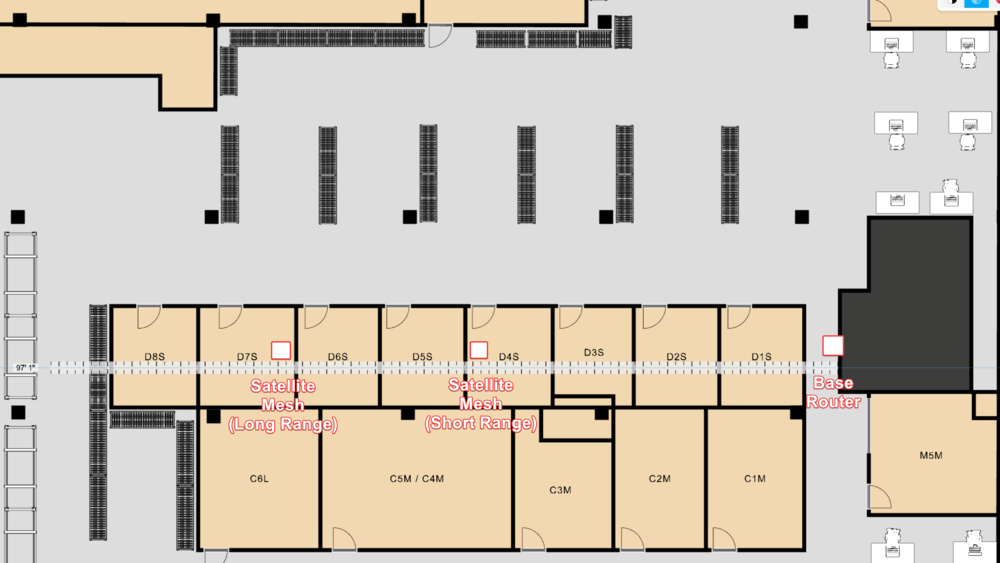

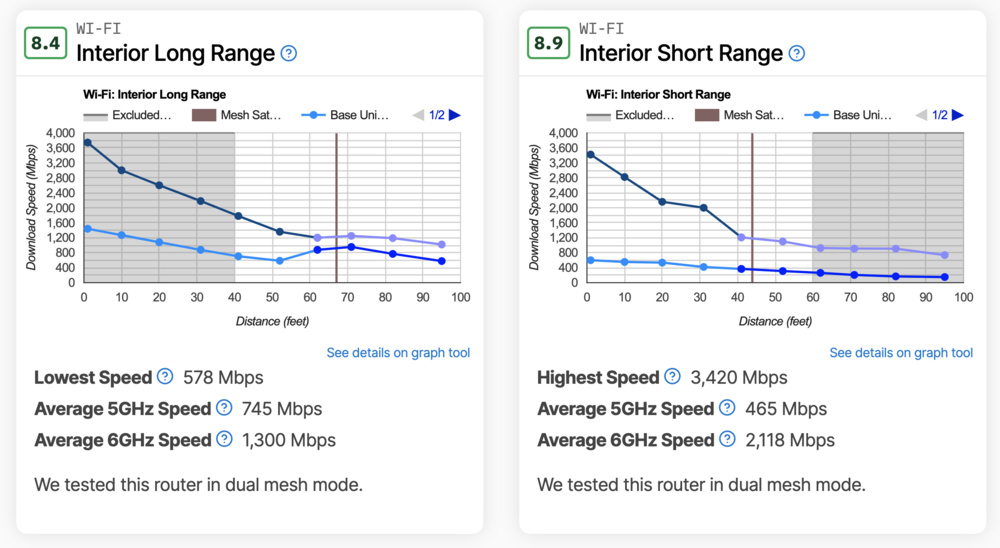

This section is where we evaluate the router's wireless performance with two main tests: Interior Long Range and Interior Short Range. For both tests, we set up the router and connect its WAN port to an iperf3 server, which we host locally. We then use a laptop running Linux equipped with an Intel BE200 Wi-Fi 7 card to measure speeds. We take measurements every ~10 feet from room D1S to past room D8S, 95 feet away. We test on both the 5GHz and 6GHz bands if the router we're testing supports them. If we're testing a mesh system, we set up the satellite access point 44 feet away in room D4S for the short-range test and 67 feet away in room D7S for the long-range test.
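As a rough illustration of a single measurement point, here's a minimal Python sketch that runs an iperf3 client against a local server and converts its JSON report into Mbps. It isn't necessarily how our test bench is scripted: the server address, the test duration, using `-R` (reverse mode, so the server sends and the laptop's download speed is measured), and reading the receiver-side total are all assumptions for illustration.

```python
import json
import subprocess


def run_iperf3(server: str, seconds: int = 10, reverse: bool = True) -> str:
    """Run an iperf3 TCP client against `server` and return its JSON report.

    Assumption: reverse=True adds -R, so the server sends data and the
    result reflects the laptop's download speed over Wi-Fi.
    """
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


def received_mbps(report_json: str) -> float:
    """Extract the receiver-side average throughput, in Mbps, from a TCP report."""
    report = json.loads(report_json)
    bits_per_second = report["end"]["sum_received"]["bits_per_second"]
    return bits_per_second / 1_000_000
```

At each test position, a call like `received_mbps(run_iperf3("192.168.1.10"))` (the address is hypothetical) would yield one speed reading to record alongside its distance from the router.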

The long-range test evaluates how the router or mesh system performs at large distances, like in big, multi-level homes. It's scored on two figures: the lowest speed measured at any point, since that indicates the effective range before speeds become unusable, and the average speed from 40 to 95 feet. The short-range test is designed to show how fast the router is at shorter distances, so it's scored on the fastest speed the router achieved and the average speed from 1 to 60 feet.
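To make these scoring inputs concrete, here's a minimal Python sketch that computes the four figures described above from a list of (distance, speed) samples. The sample format and function names are hypothetical, and the sketch stops at the raw inputs: how they're weighted into a final score isn't described here, so it doesn't attempt that step.

```python
from statistics import mean

# Hypothetical sample format: (distance_in_feet, speed_in_mbps) at one test position.
Sample = tuple[float, float]


def long_range_inputs(samples: list[Sample]) -> dict[str, float]:
    """Lowest speed at any position, plus the average speed from 40 to 95 feet."""
    far = [speed for dist, speed in samples if 40 <= dist <= 95]
    return {
        "lowest_mbps": min(speed for _, speed in samples),
        "avg_40_to_95_mbps": mean(far),
    }


def short_range_inputs(samples: list[Sample]) -> dict[str, float]:
    """Fastest speed at any position, plus the average speed from 1 to 60 feet."""
    near = [speed for dist, speed in samples if 1 <= dist <= 60]
    return {
        "fastest_mbps": max(speed for _, speed in samples),
        "avg_1_to_60_mbps": mean(near),
    }
```

Each function should be fed samples from its own test run, since the long- and short-range tests use different satellite placements when testing mesh systems.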

Finally, we publish and score the router's Wi-Fi specifications. Routers that support newer Wi-Fi versions (like Wi-Fi 7), DFS (dynamic frequency selection), and a 6GHz band score higher.

Writing

Once testers finish testing a router, the first peer-review phase begins. During this stage, writers and testers collaborate closely to validate test results. Although not directly visible in the final review, this step ensures accuracy and quality. We cross-check our findings with comparable routers, our own expectations, industry knowledge, and community feedback. If any questions arise, testers perform additional testing until we reach a consensus. This phase can last from several minutes to a few days, depending on complexity. We then release early access test results to insiders and start drafting the full review.

Each review opens with an introduction that covers standout features and key selling points. We then provide detailed sections tailored to specific use cases, illustrating how the router performs in various scenarios, like in a small apartment or a large, multi-level home. Comparative sections highlight feature and variant differences, as well as how the router fits into the wider market.

Although our test results aim to be clear and straightforward, we provide supplementary context to clarify complex outcomes or unusual findings, including comparisons and technical details when necessary.

After completing the first draft, a second peer-review phase takes place. Another writer looks over the review for clarity, readability, and accuracy, while the original tester reconfirms all technical statements to ensure accuracy.

Lastly, the review is sent to our editing team for a thorough check of formatting, consistency, and adherence to internal style guidelines. Their careful review guarantees a polished, error-free final product that meets our high-quality standards.

Recommendations

Our thorough testing and individual product reviews directly inform our router recommendations. These curated lists are designed to help you choose the right router; we continuously monitor these selections and update them as needed. We consider more than just the highest scores: pricing, availability, user satisfaction, buying experience, customer support, and community-reported reliability issues also significantly influence our choices.

Keep in mind, these recommendations are intended as guidance rather than absolute rankings suitable for every individual. They're tailored primarily for users who aren't experts or those looking for a reliable starting point in their research for the ideal router.

Retests

We keep the products we test for as long as they're relevant and widely available for purchase, and often even longer. Our writing, testing, and test development teams collaborate to decide which products to keep and which we can safely resell. We typically hold on to products featured in our recommendation articles, those expected to remain popular despite their age, and occasionally, noteworthy products we may need to use as references when updating our test bench.

Keeping a large number of routers in our office lets us retest them whenever needed. We might retest a router for various reasons, such as community reports of performance issues that show up over time, or firmware updates that add new functionality or promise performance improvements.

This process looks like our review testing, but on a smaller scale. Testers perform the retests, and writers and testers collaborate to validate them. Writers then take over again and update the affected text with context for our new results. After our editing team validates the writers' work, we publish a new version of the review. For full transparency, we leave a public message explaining what changes we made, why we made them, and which tests were affected.

Videos and Further Reading

If you want to see what our review pipeline looks like, check out the video below.

We also produce in-depth video reviews and recommendations using a similar pipeline of writers, editors, and videographers with multiple rounds of validation for accuracy at every turn. For mouse, keyboard, and monitor reviews in video form, see our dedicated RTINGS.com Computer YouTube channel.

For all other pages on specific tests, test bench version changelogs, or R&D articles, you can browse all our router articles.

How to Contact Us

Constant improvement is key to our continued success, and we rely on feedback to help us. We encourage you to send us your questions, criticisms, or suggestions anytime. You can reach us in the comments section of this article, anywhere on our forums, on Discord, or by emailing feedback@rtings.com.