Products

LG 27GS95QE-B: Tested using Methodology v2.1.1, updated Nov 07, 2025 02:10 PM

ASUS ROG Strix OLED XG27AQDMG: Tested using Methodology v2.1.1, updated Nov 19, 2025 07:44 PM

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| Max Refresh Rate | 240 Hz | 240 Hz |
| Size | 27" | 27" |
| Panel Type | OLED | OLED |
| Native Resolution | 2560 x 1440 | 2560 x 1440 |

Our Verdict

The LG 27GS95QE-B and the ASUS ROG Strix OLED XG27AQDMG are very similar 27-inch OLED gaming monitors. The ASUS is the better option if you prefer the vividness of a glossy screen. It's also the better choice if you find VRR flicker distracting, as it has a setting to reduce it, though that setting causes some stutter. On the other hand, the LG's matte screen looks more consistent across different lighting conditions. The LG is also the better choice if you plan to use a PS5 or Xbox Series X|S with your monitor, as its HDMI 2.1 ports accept 4k @ 120Hz signals, which the ASUS's HDMI 2.0 ports don't.

Variants

- 27GS95QE-B (27")

- ROG Strix OLED XG27AQDMG (27")

- ROG Strix OLED XG27AQDMGZ (27")


Main Differences for PC Gaming

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| PC Gaming | 9.0 | 9.0 |

Full Comparison

Design

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **Style** | | |
| Curved | No | No |
| Curve Radius | Not Curved | Not Curved |
| **Build Quality** | 8.5 | 8.5 |
| **Ergonomics** | 7.7 | 8.7 |
| Height Adjustment | 4.3" (11.0 cm) | 4.5" (11.4 cm) |
| Min Height To Top Of Panel | 18.3" (46.5 cm) | 15.1" (38.4 cm) |
| Tilt Range | -15° to 5° | -15° to 5° |
| Rotate Portrait/Landscape | Yes, Counter Clockwise | Yes, Both Ways |
| Swivel Range | -10° to 10° | -45° to 45° |
| Wall Mount | VESA 100x100 | VESA 100x100 |
| **Stand** | | |
| Base Width | 21.0" (53.4 cm) | 10.6" (26.8 cm) |
| Base Depth | 11.8" (30.0 cm) | 8.6" (21.8 cm) |
| Thickness (With Display) | 8.9" (22.6 cm) | 6.6" (16.8 cm) |
| Weight (With Display) | 16.8 lbs (7.6 kg) | 14.6 lbs (6.6 kg) |
| **Display** | | |
| Size | 27" | 27" |
| Housing Width | 23.8" (60.5 cm) | 23.9" (60.6 cm) |
| Housing Height | 14.1" (35.8 cm) | 14.5" (36.9 cm) |
| Thickness (Without Stand) | 1.9" (4.7 cm) | 2.2" (5.6 cm) |
| Weight (Without Stand) | 11.9 lbs (5.4 kg) | 9.2 lbs (4.2 kg) |
| Borders Size (Bezels) | 0.4" (1.0 cm) | 0.4" (1.0 cm) |
| **In The Box** | | |
| Power Supply | External Brick | External Brick |

Picture Quality

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **Contrast** | 10 | 10 |
| Native Contrast | Inf : 1 | Inf : 1 |
| Contrast With Local Dimming | Inf : 1 | Inf : 1 |
| **Local Dimming** | 10 | 10 |
| Local Dimming | No | No |
| Backlight | No Backlight | No Backlight |
| **SDR Brightness** | 7.3 | 7.9 |
| Real Scene | 271 cd/m² | 334 cd/m² |
| Peak 2% Window | 335 cd/m² | 452 cd/m² |
| Peak 10% Window | 338 cd/m² | 448 cd/m² |
| Peak 25% Window | 340 cd/m² | 452 cd/m² |
| Peak 50% Window | 317 cd/m² | 336 cd/m² |
| Peak 100% Window | 261 cd/m² | 274 cd/m² |
| Sustained 2% Window | 332 cd/m² | 447 cd/m² |
| Sustained 10% Window | 334 cd/m² | 445 cd/m² |
| Sustained 25% Window | 338 cd/m² | 448 cd/m² |
| Sustained 50% Window | 314 cd/m² | 335 cd/m² |
| Sustained 100% Window | 260 cd/m² | 273 cd/m² |
| Automatic Brightness Limiting (ABL) | 0.017 | 0.034 |
| Minimum Brightness | 18 cd/m² | 30 cd/m² |
| **HDR Brightness** | 6.9 | 7.3 |
| VESA DisplayHDR Certification | DisplayHDR TRUE BLACK 400 | DisplayHDR TRUE BLACK 400 |
| Real Scene | 439 cd/m² | 504 cd/m² |
| Peak 2% Window | 620 cd/m² | 1,151 cd/m² |
| Peak 10% Window | 620 cd/m² | 760 cd/m² |
| Peak 25% Window | 449 cd/m² | 445 cd/m² |
| Peak 50% Window | 324 cd/m² | 329 cd/m² |
| Peak 100% Window | 265 cd/m² | 264 cd/m² |
| Sustained 2% Window | 610 cd/m² | 1,115 cd/m² |
| Sustained 10% Window | 610 cd/m² | 747 cd/m² |
| Sustained 25% Window | 446 cd/m² | 440 cd/m² |
| Sustained 50% Window | 323 cd/m² | 328 cd/m² |
| Sustained 100% Window | 264 cd/m² | 264 cd/m² |
| Automatic Brightness Limiting (ABL) | 0.055 | 0.084 |
| **Gray Uniformity** | 8.7 | 8.9 |
| 50% Std. Dev. | 1.826% | 1.322% |
| 50% DSE | 0.116% | 0.115% |
| **Black Uniformity** | 10 | 10 |
| Native Std. Dev. | 0.168% | 0.159% |
| Std. Dev. w/ L.D. | N/A | N/A |
| **Color Accuracy (Pre-Calibration)** | 9.0 | 7.9 |
| Picture Mode | sRGB | sRGB Cal Mode |
| sRGB Gamut Area xy | 99.1% | 106.8% |
| White Balance dE (Avg.) | 1.79 | 3.44 |
| Color Temperature (Avg.) | 6,419 K | 6,160 K |
| Gamma (Avg.) | 2.16 | 2.44 |
| Color dE (Avg.) | 2.22 | 2.57 |
| Contrast Setting | 70 | N/A |
| RGB Settings | 50-50-50 | Default |
| Gamma Setting | Default | Default |
| Brightness Setting | 90 | 40 |
| Measured Brightness | 125 cd/m² | 120 cd/m² |
| Brightness Locked | No | No |
| **Color Accuracy (Post-Calibration)** | 8.8 | 9.1 |
| Picture Mode | sRGB | User Mode |
| sRGB Gamut Area xy | 100.1% | 105.9% |
| White Balance dE (Avg.) | 2.53 | 0.61 |
| Color Temperature (Avg.) | 6,055 K | 6,568 K |
| Gamma (Avg.) | 2.19 | 2.19 |
| Color dE (Avg.) | 2.23 | 1.03 |
| Contrast Setting | 70 | 80 |
| RGB Settings | 49-49-47 | 100-100-100 |
| Gamma Setting | Default | 2.2 |
| Brightness Setting | 57 | 31 |
| Measured Brightness | 99 cd/m² | 100 cd/m² |
| **SDR Color Gamut** | 9.3 | 9.6 |
| sRGB Coverage xy | 98.0% | 100.0% |
| sRGB Picture Mode | sRGB | User Mode |
| Adobe RGB Coverage xy | 86.9% | 87.9% |
| Adobe RGB Picture Mode | Gamer 1 | User Mode |
| **HDR Color Gamut** | 9.2 | 9.0 |
| Wide Color Gamut | Yes | Yes |
| DCI-P3 Coverage xy | 97.4% | 96.3% |
| DCI-P3 Picture Mode | Gamer 1 | Console HDR (100) |
| Rec. 2020 Coverage xy | 70.6% | 69.4% |
| Rec. 2020 Picture Mode | Gamer 1 | Console HDR (100) |
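The "Coverage xy" figures compare a monitor's gamut with a reference gamut on the CIE 1931 xy chromaticity diagram. The sketch below shows one common way to compute such a number. It assumes that coverage means the area where the measured and reference triangles overlap, divided by the area of the reference triangle. RTINGS's exact procedure isn't described on this page. The overlap is found with Sutherland-Hodgman polygon clipping, and areas come from the shoelace formula. The primaries are the standard published sRGB and DCI-P3 xy coordinates, not measurements from either monitor. The helper `relative_area` is a hypothetical name for the plain area ratio. Assuming the "Gamut Area xy" rows report that ratio, it would explain why they can exceed 100% (e.g. 106.8%) while coverage cannot.

```python
from typing import List, Tuple

Point = Tuple[float, float]

# Standard xy chromaticities of the red, green and blue primaries.
SRGB: List[Point] = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
DCI_P3: List[Point] = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def signed_area(poly: List[Point]) -> float:
    """Shoelace formula: positive for counter-clockwise vertex order."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0


def area(poly: List[Point]) -> float:
    return abs(signed_area(poly))


def ccw(poly: List[Point]) -> List[Point]:
    """Return the polygon with counter-clockwise vertex order."""
    return poly if signed_area(poly) > 0 else poly[::-1]


def clip(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: clip `subject` against the convex polygon `clipper`."""
    clipper = ccw(clipper)
    output = ccw(subject)
    for (ax, ay), (bx, by) in zip(clipper, clipper[1:] + clipper[:1]):
        if not output:
            break

        def side(p: Point) -> float:
            # >= 0 when p is on or left of edge a->b, i.e. inside a CCW clipper.
            return (bx - ax) * (p[1] - ay) - (by - ay) * (p[0] - ax)

        def intersect(p: Point, q: Point) -> Point:
            # Point where segment p->q crosses the clipping edge's line.
            sp, sq = side(p), side(q)
            t = sp / (sp - sq)
            return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

        inp, output = output, []
        for p, q in zip(inp, inp[1:] + inp[:1]):
            p_in, q_in = side(p) >= 0, side(q) >= 0
            if p_in and q_in:
                output.append(q)
            elif p_in:
                output.append(intersect(p, q))
            elif q_in:
                output.append(intersect(p, q))
                output.append(q)
    return output


def coverage(measured: List[Point], reference: List[Point]) -> float:
    """Fraction of the reference gamut's area that the measured gamut overlaps."""
    overlap = clip(measured, reference)
    return area(overlap) / area(reference) if len(overlap) >= 3 else 0.0


def relative_area(measured: List[Point], reference: List[Point]) -> float:
    """Plain area ratio, which can exceed 1.0 for a wider gamut."""
    return area(measured) / area(reference)


if __name__ == "__main__":
    print(f"DCI-P3 covers {coverage(DCI_P3, SRGB):.1%} of sRGB")
    print(f"sRGB covers {coverage(SRGB, DCI_P3):.1%} of DCI-P3")
    print(f"DCI-P3 area is {relative_area(DCI_P3, SRGB):.1%} of sRGB's")
```

Because sRGB lies entirely inside DCI-P3, a P3 gamut covers all of sRGB, while its raw area is roughly 136% of sRGB's. This is the same kind of gap as the one between "sRGB Gamut Area xy" and "sRGB Coverage xy" in the measurements.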

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **HDR Color Volume** | 7.8 | 8.0 |
| 1,000 cd/m² DCI-P3 Coverage ICtCp | 68.6% | 82.0% |
| DCI-P3 Picture Mode | Gamer 1 | Console HDR (100) |
| 10,000 cd/m² Rec. 2020 Coverage ICtCp | 33.0% | 34.8% |
| Rec. 2020 Picture Mode | Gamer 1 | Console HDR (100) |
| **Viewing Angle** | 10 | 9.8 |
| Color Washout From Left | 70° | 70° |
| Color Washout From Right | 70° | 70° |
| Color Shift From Left | 70° | 48° |
| Color Shift From Right | 70° | 63° |
| Brightness Loss From Left | 70° | 70° |
| Brightness Loss From Right | 70° | 70° |
| Black Level Raise From Left | 70° | 70° |
| Black Level Raise From Right | 70° | 70° |
| Gamma Shift From Left | 70° | 70° |
| Gamma Shift From Right | 70° | 70° |
| **Text Clarity** | 6.5 | 6.5 |
| Panel Type | OLED | OLED |
| Subpixel Layout | RWBG | RWBG |
| **Direct Reflections** | 8.4 | 5.4 |
| Peak Direct Reflection Intensity | 13.8% | 46.7% |
| Screen Finish | Matte | Glossy |
| **Ambient Black Level Raise** | 8.0 | 8.2 |
| Black Luminance @ 0 lx | 0.00 cd/m² | 0.00 cd/m² |
| Black Luminance @ 1000 lx | 0.92 cd/m² | 0.81 cd/m² |
| **Total Reflected Light** | 8.2 | 8.4 |
| Total Reflected Light Intensity | 14,180% ⋅ pixel | 12,184% ⋅ pixel |
| Diffraction Artifacts | No | No |
| **Gradient** | 9.6 | 8.5 |
| Color Depth | 10 Bit | 10 Bit |

Motion

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **Refresh Rate** | 8.0 | 8.0 |
| Native Refresh Rate | 240 Hz | 240 Hz |
| Max Refresh Rate | 240 Hz | 240 Hz |
| Max Refresh Rate Over DP | 240 Hz | 240 Hz |
| Max Refresh Rate Over HDMI | 240 Hz | 144 Hz |
| Max Refresh Rate Over DP @ 10-bit | 240 Hz | 240 Hz |
| Max Refresh Rate Over HDMI @ 10-Bit | 240 Hz | 60 Hz |
| DSC Toggle | Yes | Yes |
| DSC Off Max Refresh Rate Over DP | 240 Hz | 240 Hz |
| DSC Off Max Refresh Rate Over HDMI | N/A | 144 Hz |
| **Variable Refresh Rate (VRR)** | | |
| Variable Refresh Rate | Yes | Yes |
| FreeSync | Yes | Yes |
| G-SYNC | Compatible (NVIDIA Certified) | Compatible (NVIDIA Certified) |
| VRR Maximum | 240 Hz | 240 Hz |
| VRR Minimum | < 20 Hz | < 20 Hz |
| VRR Supported Connectors | DisplayPort, HDMI | DisplayPort, HDMI |
| **VRR Motion Performance** | 9.9 | 9.9 |
| Recommended VRR OD Setting | No OD Mode | No OD Mode |
| Variable Overdrive Advertised | No | No |
| Avg. CAD | 11 | 13 |
| Best CAD | 11 | 13 |
| Worst CAD | 11 | 13 |
| **Refresh Rate Compliance** | 9.7 | 9.8 |
| VRR Compliance @ Max Hz | 93% | 93% |
| VRR Compliance @ 120 FPS | 95% | 96% |
| VRR Compliance @ 60 FPS | 96% | 97% |
| **CAD @ Max Refresh Rate** | 9.9 | 9.9 |

Pursuit Photo At Max Refresh

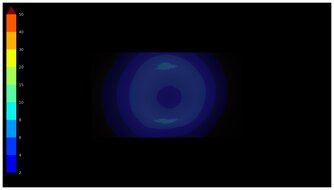

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| Recommended Overdrive Setting | No OD Mode | No OD Mode |
| Avg. CAD | 11 | 13 |
| Best 10% CAD | 6 | 7 |
| Worst 10% CAD | 16 | 19 |
| **Response Time @ Max Refresh Rate** | | |
| Recommended Overdrive Setting | No OD Mode | No OD Mode |
| First Response Time | 0.3 ms | 0.3 ms |
| Total Response Time | 0.3 ms | 0.3 ms |
| RGB Overshoot | 0 RGB | 0 RGB |
| Worst 10% First Response Time | 0.7 ms | 0.4 ms |
| Worst 10% Total Response Time | 0.7 ms | 0.4 ms |
| Worst 10% RGB Overshoot | 0 RGB | 0 RGB |
| **Response Time @ 120Hz** | | |
| Recommended Overdrive Setting | No OD Mode | No OD Mode |
| First Response Time | 0.3 ms | 0.3 ms |
| Total Response Time | 0.3 ms | 0.3 ms |
| RGB Overshoot | 0 RGB | 0 RGB |
| Worst 10% First Response Time | 0.7 ms | 0.4 ms |
| Worst 10% Total Response Time | 0.7 ms | 0.4 ms |
| Worst 10% RGB Overshoot | 0 RGB | 0 RGB |
| **Response Time @ 60Hz** | | |
| Recommended Overdrive Setting | No OD Mode | No OD Mode |
| First Response Time | 0.4 ms | 0.3 ms |
| Total Response Time | 0.4 ms | 0.3 ms |
| RGB Overshoot | 0 RGB | 0 RGB |
| Worst 10% First Response Time | 0.7 ms | 0.4 ms |
| Worst 10% Total Response Time | 0.7 ms | 0.4 ms |
| Worst 10% RGB Overshoot | 0 RGB | 0 RGB |

Backlight Strobing (BFI)

Backlight Strobing Picture

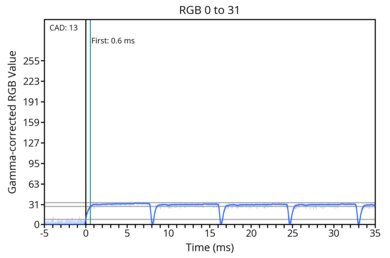

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| Backlight Strobing (BFI) | No BFI | Yes |
| Maximum Frequency | N/A | 120 Hz |
| Minimum Frequency | N/A | 120 Hz |
| Longest Pulse Width Brightness | N/A | 132 cd/m² |
| Shortest Pulse Width Brightness | N/A | 132 cd/m² |
| Pulse Width Control | No BFI | No |
| Pulse Phase Control | No BFI | No |
| Pulse Amplitude Control | No BFI | Yes |
| VRR At The Same Time | No BFI | No |
| **VRR Flicker** | 5.9 | 4.7 |
| Dark Gray Flicker | 4.2 RGB | 6.3 RGB |
| Middle Gray Flicker | 1.2 RGB | 2.4 RGB |
| Light Gray Flicker | 1.2 RGB | 1.6 RGB |
| **Image Flicker** | 10 | 10 |
| Flicker-Free | No | No |
| PWM Dimming Frequency | 0 Hz | 0 Hz |

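Inputs

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **Input Lag** | 9.1 | 8.9 |
| Native Resolution @ Max Hz | 2.8 ms | 2.6 ms |
| Native Resolution @ 120Hz | 5.0 ms | 6.3 ms |
| Native Resolution @ 60Hz | 9.2 ms | 14.1 ms |
| Backlight Strobing (BFI) | N/A | 14.6 ms |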
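At a given refresh rate, one refresh period lasts 1000 / Hz milliseconds. The short sketch below uses only that arithmetic and the native-resolution input lag figures listed above, and expresses each lag as a fraction of one frame. This framing is an editorial illustration, not part of how RTINGS scores input lag. The dictionary name `INPUT_LAG_MS` is hypothetical.

```python
def frame_time_ms(hz: float) -> float:
    """Duration of one refresh period, in milliseconds."""
    return 1000.0 / hz


# Native-resolution input lag (ms), copied from the measurements above.
INPUT_LAG_MS = {
    "LG 27GS95QE-B": {240: 2.8, 120: 5.0, 60: 9.2},
    "ASUS ROG Strix OLED XG27AQDMG": {240: 2.6, 120: 6.3, 60: 14.1},
}

if __name__ == "__main__":
    for monitor, lags in INPUT_LAG_MS.items():
        for hz, lag in sorted(lags.items(), reverse=True):
            period = frame_time_ms(hz)
            # Share of one frame period taken up by the measured lag.
            print(f"{monitor} @ {hz} Hz: {lag} ms is {lag / period:.0%} of a {period:.2f} ms frame")
```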

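| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **Resolution** | 7.5 | 7.5 |
| Native Resolution | 2560 x 1440 | 2560 x 1440 |
| Aspect Ratio | 16:9 | 16:9 |
| Megapixels | 3.7 MP | 3.7 MP |
| Pixel Density | 109 PPI | 111 PPI |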
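Megapixels and pixel density follow directly from the resolution and the screen diagonal. PPI is the diagonal length in pixels divided by the diagonal length in inches. Using the listed 27" gives about 109 PPI. The ASUS's 111 PPI is consistent with a 26.5" viewable diagonal, but that panel size is an assumption, not a figure from this comparison.

```python
import math


def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count in millions."""
    return width_px * height_px / 1_000_000


def pixel_density(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch measured along the screen diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


if __name__ == "__main__":
    w, h = 2560, 1440
    print(f"{megapixels(w, h):.1f} MP")
    for diag in (27.0, 26.5):  # 26.5" is an assumed viewable diagonal
        print(f'{diag}": {pixel_density(w, h, diag):.0f} PPI')
```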

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **PS5 Compatibility** | 9.3 | 7.1 |
| 4k @ 120Hz | Yes | No |
| 4k @ 60Hz | Yes | Yes |
| 1440p @ 120Hz | Yes | Yes |
| 1440p @ 60Hz | Yes | Yes |
| 1080p @ 120Hz | Yes | Yes |
| 1080p @ 60Hz | Yes | Yes |
| **Xbox Series X\|S Compatibility** | 9.3 | 7.1 |
| 4k @ 120Hz | Yes | No |
| 4k @ 60Hz | Yes | Yes |
| 1440p @ 120Hz | Yes | Yes |
| 1440p @ 60Hz | Yes | Yes |
| 1080p @ 120Hz | Yes | Yes |
| 1080p @ 60Hz | Yes | Yes |
| **Inputs** | | |
| DisplayPort | 1 (DP 1.4) | 1 (DP 1.4) |
| DisplayPort Transmission Bandwidth | No DisplayPort 2.1 | No DisplayPort 2.1 |
| Mini DisplayPort | No | No |
| HDMI | 2 (HDMI 2.1) | 2 (HDMI 2.0) |
| HDMI 2.1 Bandwidth | 48Gbps (FRL 12x4) | No HDMI 2.1 |
| Daisy Chaining | No | No |
| 3.5mm Audio Out | Yes | Yes |
| Ethernet | No | No |
| HDR10 | Yes | Yes |
| Dolby Vision | No | No |
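The connection specs help explain some of the refresh-rate limits. The rough sketch below compares the uncompressed RGB data rate of the visible pixels with each link's effective payload rate. It ignores blanking intervals, so real requirements are somewhat higher and a "fits" result is only a lower bound.

The link figures are the nominal published rates:

- DisplayPort 1.4 HBR3: 4 lanes × 8.1 Gbps with 8b/10b encoding.
- HDMI 2.0: 18 Gbps with 8b/10b encoding.
- HDMI 2.1 FRL 12x4: 4 lanes × 12 Gbps = 48 Gbps with 16b/18b encoding. FEC overhead is ignored.

The names `LINKS_GBPS` and `active_data_rate_gbps` are hypothetical.

```python
# Effective payload rates in Gbps after line encoding (nominal values).
LINKS_GBPS = {
    "DP 1.4 (HBR3, 4 lanes)": 4 * 8.1 * 8 / 10,   # 25.92
    "HDMI 2.0 (TMDS)": 18.0 * 8 / 10,             # 14.4
    "HDMI 2.1 (FRL 12x4)": 4 * 12.0 * 16 / 18,    # about 42.67
}


def active_data_rate_gbps(width: int, height: int, hz: int, bits_per_channel: int) -> float:
    """Uncompressed RGB data rate for the visible pixels only (no blanking)."""
    return width * height * hz * 3 * bits_per_channel / 1e9


if __name__ == "__main__":
    for hz, bpc in [(240, 8), (240, 10), (144, 8)]:
        need = active_data_rate_gbps(2560, 1440, hz, bpc)
        for link, capacity in LINKS_GBPS.items():
            verdict = "fits" if need < capacity else "exceeds"
            print(f"{hz} Hz {bpc}-bit needs >= {need:.2f} Gbps; {link} ({capacity:.2f} Gbps) {verdict}")
```

Under these assumptions:

- 240 Hz at 8-bit (about 21.2 Gbps) fits within DisplayPort 1.4 but exceeds HDMI 2.0. This is consistent with the ASUS's 144 Hz HDMI cap.
- 240 Hz at 10-bit (about 26.5 Gbps) slightly exceeds DisplayPort 1.4's payload. This suggests 10-bit 240 Hz over DP likely relies on DSC, though the measurements don't state which bit depth was used for the DSC-off result.
- This simple model doesn't explain every limit in the table, such as the ASUS's 60 Hz cap at 10-bit over HDMI.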

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **USB** | | |
| USB-A Ports | 2 | 2 |
| USB-A Rated Speed | 5Gbps (USB 3.2 Gen 1) | 5Gbps (USB 3.2 Gen 1) |
| USB-B Upstream Port | Yes | Yes |
| USB-C Ports | 0 | 0 |
| USB-C Upstream | No USB-C Ports | No USB-C Ports |
| USB-C Rated Speed | No USB-C Ports | No USB-C Ports |
| USB-C Power Delivery | No USB-C Ports | No USB-C Ports |
| USB-C DisplayPort Alt Mode | No USB-C Ports | No USB-C Ports |
| Thunderbolt | No | No |

Features

| | LG 27GS95QE-B | ASUS ROG Strix OLED XG27AQDMG |
|---|---|---|
| **Additional Features** | | |
| Speakers | No | No |
| RGB Illumination | Presets | Controllable |
| Multiple Input Display | No | No |
| KVM Switch | No | No |
| Smart OS | No | No |


Comments

LG 27GS95QE-B vs ASUS ROG Strix OLED XG27AQDMG: Main Discussion


Has anyone had both at the same time? I've had the 27GS95 for a few days, and comparing the 27" OLEDs in the RTINGS table, I can see there's no other monitor with significantly higher brightness than the LG. Only the XG27AQDMG is almost equal to the LG in HDR.