See the previous 1.9 changelog.

See the next 1.11 changelog.

Goal

With our 1.10 test bench update, we wanted to address a few long-standing issues with our Gradient and Audio Passthrough tests. Our Gradient test has been completely overhauled to better reflect real-world usage, and it's now done in HDR, where proper gradient handling matters most. Many of you also pointed out that we weren't testing the processing capabilities of TVs, so we've added a new test, Low-Quality Content Smoothing, as a first step toward evaluating a TV's processing performance. As the name suggests, this test looks at how well a TV can smooth out rough areas in low-quality content without removing fine details. For these tests, we also wanted to eliminate the possibility of different sources affecting our results. To achieve that, we're switching from Windows PCs to a Murideo Seven Signal Generator, an industry-standard tool used by many TV reviewers and calibrators. It gives us finer control over the signals we send to a TV, so we know exactly what we're testing and the source can't alter the test signal. After all, with every test, we want to know what the TV can do, not what the source is doing.

Summary Of Changes

Below is a summary of the changes we've made in this update. More detailed information on the changes made to each test is available below this table.

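| Test Group | Change |
|---|---|
| Gradient | New native 4k and 8k HDR10 test pattern sent from a Murideo Seven Signal Generator; new subjective score for each of eight gradient lines |
| Low-Quality Content Smoothing | New test of how well a TV smooths out compression artifacts in low-quality content while preserving fine details |
| Audio Passthrough | Tests split so each one checks a single thing; new LPCM, Dolby MAT, and Dolby Digital Plus tests; ARC port removed from the ARC test |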

Gradient

Issues with our test

There were a few issues with our test. The test image we were using was mastered in 1080p, so depending on how a TV upscaled it, the upscaling itself could introduce banding. Even TVs with good upscaling performance could cause banding by blending the gradient lines. The source we used to display the test image was also a problem. We sent the test image to the TV from a Windows PC, and we discovered that the results sometimes changed from one PC to another, and even between versions of the video drivers. The same image on the same TV could look completely different coming from a laptop with an older graphics card than from a more recent computer with a graphics card from a different brand. The signal itself was also an issue: the PC sent a limited-range signal (16-235), which made banding more likely. This also shows that gradient performance with real content depends heavily on what the source does with the image. We want to test the native capabilities of the display itself, not what the source does to the signal, so we needed to move to a source that delivers consistent results.
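Part of the extra banding risk from a 16-235 signal comes down to simple arithmetic. The sketch below assumes the source takes an 8-bit full-range image and rescales it to limited range with plain rounding (an assumption; real graphics drivers may scale or dither differently). Because only 220 codes are available for 256 input levels, some neighboring input levels have to merge, which widens those steps in a gradient.

```python
def full_to_limited_8bit(value: int) -> int:
    """Rescale an 8-bit full-range code (0-255) into limited range (16-235).

    Assumes plain rounding with no dithering.
    """
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit code value")
    return round(16 + value * 219 / 255)


def merged_levels() -> int:
    """Count full-range input levels that collapse onto an already-used output code."""
    outputs = {full_to_limited_8bit(v) for v in range(256)}
    return 256 - len(outputs)


if __name__ == "__main__":
    print(full_to_limited_8bit(0), full_to_limited_8bit(255))  # 16 235
    print(merged_levels())  # 36 of the 256 input levels are lost
```

Since the mapping never skips an output code, the 36 lost levels show up as slightly wider steps scattered along the ramp, which is exactly where banding tends to appear.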

There were also issues with the scores and results presented in our reviews. The photo didn't always match the results, as the score was calculated from a test video measured with a colorimeter, which didn't always look the same as the captured photo. This means the posted standard deviations also didn't always match the photo. The other results of the test were also misleading. We reported the color depth, but it raised more questions than it answered, as we didn't differentiate between the native bit depth of the panel and the maximum color depth it could display.

The original test was done in SDR, where gradient performance is less critical and easier for TVs to get right than in HDR. HDR requires a higher color depth and commonly uses wide color gamuts like DCI-P3 or Rec. 2020, so it's more challenging for TVs to display HDR gradients properly. Take the examples below: in SDR, the TCL 4 Series/S446 2021 does a good job, and gradients are displayed well with very little banding. In HDR, on the other hand, the 50-100% red band blends in at the right, displaying closer to 50% red where it should be displaying 100% red. Compare that with the Sony X95K, where gradients are displayed much better and the color blending isn't noticeable.
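One way to see why HDR needs more bit depth is to look at the SMPTE ST 2084 (PQ) transfer function used by HDR10, which maps code values to absolute luminance up to 10,000 cd/m². The sketch below treats the signal as full range for simplicity (an assumption) and compares the luminance jump between adjacent code values near 100 cd/m² at 8 and 10 bits. With only 256 codes spread over the whole PQ range, each 8-bit step is roughly four times larger than a 10-bit step, which makes visible bands far more likely.

```python
# SMPTE ST 2084 (PQ) EOTF constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Convert a normalized PQ signal (0.0-1.0) to luminance in cd/m^2."""
    p = signal ** (1 / M2)
    return 10000 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def step_near(target_nits: float, bit_depth: int) -> float:
    """Luminance difference between the first code at or above target_nits
    and the code just below it (full-range codes assumed for simplicity)."""
    max_code = 2 ** bit_depth - 1
    code = next(c for c in range(1, max_code + 1)
                if pq_eotf(c / max_code) >= target_nits)
    return pq_eotf(code / max_code) - pq_eotf((code - 1) / max_code)


if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits}-bit step near 100 cd/m^2: {step_near(100, bits):.3f} cd/m^2")
```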

Improving our test - what we tried and what issues we encountered

Our first step was to find an ideal test pattern and an ideal way to send the image to the TVs. We knew we wanted to use the Murideo signal generator to eliminate potential differences between source PCs. The Murideo came with a few built-in test patterns that we could have used, but none covered all three colors and the grayscale gradient in a single pattern, so to simplify the test, we decided to make our own.

Using Photoshop, we created new gradient test patterns at native 4k and 8k resolutions to eliminate upscaling as a possible source of banding. We used the "Classic" gradation mode, as it delivers the most linear gradation, and created eight separate lines:

- Line 1: 100% black to 50% gray

- Line 2: 50% gray to 100% white

- Line 3: 100% black to 50% red

- Line 4: 50% red to 100% red

- Line 5: 100% black to 50% green

- Line 6: 50% green to 100% green

- Line 7: 100% black to 50% blue

- Line 8: 50% blue to 100% blue
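For anyone who wants to build a similar pattern, here is a minimal sketch of the eight lines as 16-bit RGB gradients. It assumes Photoshop's "Classic" mode behaves like a straight linear interpolation between code values and that the percentages refer to code values; both are assumptions, and the one-row-per-line layout is illustrative rather than the exact file used.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, ...]
MAX_16BIT = 65535

# (label, start color, end color); channels are fractions of full scale.
# Percentages are assumed to refer to code values.
LINES = [
    ("Black to 50% gray", (0.0, 0.0, 0.0), (0.5, 0.5, 0.5)),
    ("50% gray to 100% white", (0.5, 0.5, 0.5), (1.0, 1.0, 1.0)),
    ("Black to 50% red", (0.0, 0.0, 0.0), (0.5, 0.0, 0.0)),
    ("50% red to 100% red", (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)),
    ("Black to 50% green", (0.0, 0.0, 0.0), (0.0, 0.5, 0.0)),
    ("50% green to 100% green", (0.0, 0.5, 0.0), (0.0, 1.0, 0.0)),
    ("Black to 50% blue", (0.0, 0.0, 0.0), (0.0, 0.0, 0.5)),
    ("50% blue to 100% blue", (0.0, 0.0, 0.5), (0.0, 0.0, 1.0)),
]


def gradient_line(start, end, width: int) -> List[RGB]:
    """Linearly interpolate 16-bit RGB values from start to end across width pixels
    (a stand-in for Photoshop's "Classic" gradation, assumed to be linear)."""
    pixels = []
    for x in range(width):
        t = x / (width - 1)
        pixels.append(tuple(round((s + (e - s) * t) * MAX_16BIT)
                            for s, e in zip(start, end)))
    return pixels


def build_pattern(width: int = 3840) -> Dict[str, List[RGB]]:
    """Return one gradient row per test line (3840 px wide for a native 4k pattern)."""
    return {label: gradient_line(s, e, width) for label, s, e in LINES}
```

An 8k version of the same rows would use `width=7680`.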

We considered adding separate lines covering cyan, magenta, and yellow (CMY), but decided not to. Since TVs don't have CMY subpixels, those colors are produced by combining the other colors, so covering red, green, and blue will also directly show how CMY would look. We also validated the resulting test patterns using our Canon DP-V2411 reference monitor and confirmed there was no visible banding.

We created the Photoshop image at 16-bit color depth, then converted it to a 10-bit, HDR10, full-range RGB video and uploaded it to our Murideo. This lets us directly control how the image is sent to the TVs, and we're now sending a full-range signal. We could have sent a higher bit-depth signal, but since most modern TVs only support 10-bit color, this would force the TV to process the image more and wouldn't necessarily match the user experience with real content. To validate our decision to use the Murideo, we also tried sending the image from three different computers to the same TV: an NVIDIA RTX-based desktop, an NVIDIA GTX-based laptop, and an AMD-based laptop. Even though each computer was set to display the same image, the result on the TV was completely different from one computer to the next. The most recent computer, the RTX desktop, was the worst of the three; it couldn't display the picture properly, even on our reference monitor. Based on those results, we were confident in our decision to switch to the Murideo as our source.
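The 16-bit to 10-bit step can be sketched as a simple rescale to full range (0-1023). This assumes plain rounding without dithering; the changelog doesn't say which conversion tool or settings were used. A full-range 10-bit signal keeps all 1,024 codes, whereas 10-bit limited range only uses codes 64-940.

```python
from typing import Iterable


def to_10bit_full(value16: int) -> int:
    """Rescale a 16-bit code (0-65535) to a 10-bit full-range code (0-1023).
    Plain rounding, no dithering (an assumption)."""
    if not 0 <= value16 <= 65535:
        raise ValueError("expected a 16-bit code value")
    return round(value16 * 1023 / 65535)


def distinct_10bit_levels(values16: Iterable[int]) -> int:
    """Count how many distinct 10-bit codes a run of 16-bit values maps to."""
    return len({to_10bit_full(v) for v in values16})


if __name__ == "__main__":
    print(distinct_10bit_levels(range(65536)))  # 1024: every full-range code is used
    print(to_10bit_full(32768))  # 512: a 50% line ends near the middle code
```

For a 0-50% line spread across 3840 pixels, that works out to roughly 7.5 pixels per 10-bit code, so any merged or skipped codes show up as clearly visible bands.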

These changes also affected our scoring. We now assign a subjective score to each of the eight lines listed above. The final box score is the average of the eight line scores, with each line weighted equally at 12.5% of the final score. As a result of all these changes, our score and photo now much more closely represent the true user experience of a TV's native gradient handling, with all gradient smoothing features disabled.
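Because each of the eight lines carries the same 12.5% weight, the box score is simply the mean of the line scores. A minimal sketch, assuming a 0-10 scale for each line and using made-up example scores:

```python
from typing import Sequence

LINE_WEIGHT = 0.125  # each of the eight gradient lines is worth 12.5%


def gradient_box_score(line_scores: Sequence[float]) -> float:
    """Weighted average of the eight per-line subjective scores (0-10 scale assumed)."""
    if len(line_scores) != 8:
        raise ValueError("expected exactly one score per gradient line (8)")
    return sum(score * LINE_WEIGHT for score in line_scores)


if __name__ == "__main__":
    example = [9, 8, 8, 7, 9, 9, 8, 6]  # hypothetical per-line scores
    print(gradient_box_score(example))  # 8.0
```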

Low-Quality Content Smoothing

The goal of the test - why does it matter?

Macroblocking and pixelation in low-quality content.

TVs have been improving rapidly in quality and processing features, but the availability of high-quality content hasn't changed much in recent years. A large number of people still watch low-quality content from streaming services and older physical media, and even high-quality streaming services still have issues with certain content. Our existing tests didn't show how well a TV handles that low-quality content and issues like macroblocking and pixelation. Unlike most of our tests, which aim to test the native capabilities of the display, this time we also want to test how effective a TV can be with advanced picture processing enabled.

The main goal of this test is to show how well a TV's processing can improve the appearance of this low-quality content. Even with good-quality streaming sources, near blacks are often heavily compressed to save bandwidth, something anyone who watched season eight of Game of Thrones will be very familiar with. This compression leads to significant blockiness in blacks, and we want to see if the TV's processing can smooth it out. Smoothing out low-quality content isn't enough on its own; we also want to see how well the TV preserves fine details. Can the TV's processing tell compression artifacts apart from actual fine details and avoid smoothing those details away?
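TV makers don't publish how their processing works, so the following is only a toy illustration of the trade-off described above, not any TV's actual algorithm. It smooths a single row of brightness values, but only where neighboring differences are at or below a threshold (treated as compression steps); larger jumps are treated as real detail and left alone. The threshold value is an arbitrary assumption.

```python
from typing import List


def smooth_small_steps(row: List[float], threshold: float) -> List[float]:
    """Toy edge-aware smoothing of one row of brightness values.

    A sample is replaced by the mean of itself and its two neighbors only when
    both neighboring differences are <= threshold. Larger jumps are assumed to be
    real detail and kept as-is. The first and last samples are never changed.
    """
    out = list(row)
    for i in range(1, len(row) - 1):
        left, mid, right = row[i - 1], row[i], row[i + 1]
        if abs(mid - left) <= threshold and abs(right - mid) <= threshold:
            out[i] = (left + mid + right) / 3
    return out


if __name__ == "__main__":
    banded = [10, 10, 10, 12, 12, 12]  # hard step, as left by heavy compression
    edge = [10, 10, 10, 80, 80, 80]    # large jump, treated as real detail
    print(smooth_small_steps(banded, threshold=2))  # step spread over two samples
    print(smooth_small_steps(edge, threshold=2))    # edge left intact
```

Raising the threshold smooths more banding but starts erasing genuine low-contrast detail, which is the balance this test is designed to expose.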

Creating our test - what we tried and what issues we encountered

To create this test, we first had to decide on the type of source content to use. We picked nine sample scenes, tested the smoothing capabilities of five TVs with each scene, and ranked their performance. We used the Sony X95K, LG A2 OLED, Samsung QN95B QLED, TCL 4 Series/S446 2021, and Samsung TU8000. Except for one outlier, our preliminary rankings were consistent across all scenes, so we knew the choice of sample clip didn't matter much. Below are the results for each sample image with the first five TVs we tested.

| TV | Airplane | Astronaut | Guy In The Dark | Hills | Lake | Neon | Sailboat | Sunset | Swimmer |
|---|---|---|---|---|---|---|---|---|---|
| LG A2 OLED | | | | | | | | | |
| Samsung TU8000 | | | | | | | | | |
| Samsung QN95B QLED | | | | | | | | | |
| Sony X95K | | | | | | | | | |
| TCL 4 Series/S446 2021 | | | | | | | | | |
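The changelog doesn't say how the consistency of the rankings across scenes was judged. One possible check, sketched below as an illustration rather than the method actually used, is Spearman's rank correlation between each pair of scenes, flagging any scene whose average agreement with the others falls below a chosen cutoff. The TV names, rankings, and cutoff in the example are placeholders, not results.

```python
from typing import Dict, List

Ranking = Dict[str, int]  # TV name -> rank (1 = best), no ties


def spearman(a: Ranking, b: Ranking) -> float:
    """Spearman's rank correlation for two tie-free rankings of the same TVs."""
    n = len(a)
    d_squared = sum((a[tv] - b[tv]) ** 2 for tv in a)
    return 1 - 6 * d_squared / (n * (n * n - 1))


def outlier_scenes(rankings: Dict[str, Ranking], min_rho: float = 0.5) -> List[str]:
    """Return scenes whose mean correlation with every other scene is below min_rho."""
    flagged = []
    for scene, ranks in rankings.items():
        others = [spearman(ranks, other)
                  for name, other in rankings.items() if name != scene]
        if sum(others) / len(others) < min_rho:
            flagged.append(scene)
    return flagged


if __name__ == "__main__":
    usual = {"TV A": 1, "TV B": 2, "TV C": 3, "TV D": 4, "TV E": 5}
    reversed_order = {"TV A": 5, "TV B": 4, "TV C": 3, "TV D": 2, "TV E": 1}
    scenes = {f"Scene {i}": usual for i in range(1, 6)}
    scenes["Scene 6"] = reversed_order  # placeholder outlier
    print(outlier_scenes(scenes))  # ['Scene 6']
```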

We planned to include before-and-after pictures so you could see how effectively each TV smoothed out banding. After running the preliminary test with the first five TVs, we discovered that the "before" pictures were remarkably similar between TVs, so we decided to drop them.

We decided to use the "Guy In The Dark" sample clip, which shows a man in a dark room, as it has a large near-black area that is more representative of the content that causes issues for most users. We also chose this clip because it's 1080p, which makes it more susceptible to banding and stress-tests a TV's ability to smooth it out. At the same time, a 1080p source limits the amount of work the TV has to do to upscale the image.

We started with a very high-quality clip and re-encoded it in HandBrake at a low-quality setting. We use a PC to play the video on the TV in SDR, pause it on frame 150, subjectively rate the overall smoothing done by the TV, and check for any lost details in the man's face. The final score is a weighted average of these two subjective scores, with 70% coming from the overall smoothing and the remaining 30% from the preservation of fine details. A TV with no smoothing features that displays the video exactly as-is would score a 3, as it would earn no credit for smoothing but wouldn't lose any fine details. A perfect TV would completely smooth out the black areas of the scene without losing any fine details.
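The weighting can be written out to check the numbers quoted above. This sketch assumes both subjective sub-scores use a 0-10 scale and that the remaining 30% comes from fine-detail preservation; neither is stated outright, but both are consistent with a TV that does no smoothing landing at 3.

```python
SMOOTHING_WEIGHT = 0.7  # overall smoothing of the dark areas
DETAIL_WEIGHT = 0.3     # preservation of fine details in the man's face (assumed)


def low_quality_smoothing_score(smoothing: float, detail: float) -> float:
    """Combine the two subjective sub-scores, each assumed to be on a 0-10 scale."""
    for sub_score in (smoothing, detail):
        if not 0 <= sub_score <= 10:
            raise ValueError("sub-scores are assumed to be between 0 and 10")
    return SMOOTHING_WEIGHT * smoothing + DETAIL_WEIGHT * detail


if __name__ == "__main__":
    print(low_quality_smoothing_score(0, 10))   # no smoothing, no detail lost: 3.0
    print(low_quality_smoothing_score(10, 10))  # perfect TV: 10.0
```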

Sony X95K - Low-quality content is smoothed out well and fine details are preserved

Vizio V Series 2022 - Decent preservation of fine details, but very little smoothing

Above, you can see two examples from our new low-quality content smoothing test. Sony is well-known for its TV processing algorithms, and this example shows why. The Sony X95K does an excellent job with low-quality content. There's very little loss of fine details in the man's face and neck, and the compression artifacts in the dark areas of the screen are smoothed out well. Blacks don't look blocky, and there's very little banding.

The Vizio V Series 2022 does almost no smoothing. There's no loss of fine details, but there's significant banding in dark areas of the scene. To the right, you can see the Sony X95K again, but this time with smoothing disabled. The image is very similar to the Vizio, confirming that the Vizio is hardly doing any smoothing at all.

During our initial test run with 16 TVs, we encountered a few surprising results. The LG NANO90 2021 performed better than the Sony X95K, which didn't match our expectations. The Sony A95K also scored better than the Sony X95K, even though they're advertised to use the same processor. Finally, we were surprised by how good the Amazon Fire TV 4-Series was at reducing banding. We decided to run the new gradient and low-quality content smoothing tests on six additional TVs to help confirm our findings.

We tested the Sony A80K OLED and compared those results to the Sony A95K OLED since they use the same processor. The results were nearly identical for the smoothing test, and the difference in gradient performance can be explained by the difference between WOLED and QD-OLED panels. Sticking with Sony, we also tested the Sony X90K to give us a comparison to the Sony X95K and the Sony X80K. We expected the X90K to perform about the same as the X95K since they use the same processor, and we expected both of those to perform better than the Sony X80K, which uses a lower-end, older processor. Again, our results were consistent and matched our expectations for those three TVs.

Moving on to LG, we wanted to confirm our results for the LG A2 OLED, so we tested the LG B2 OLED. Using the LG Service Remote, we entered the service menu to confirm that both use the same processor. As with the Sony TVs, our results were very similar, again confirming our test methodology. We added the LG NANO85 2021 to confirm our LG NANO90 2021 results and discovered something interesting. For all of these TVs, we run the smoothing and gradient tests with our recommended settings. Although the NANO90 and NANO85 are similar TVs, the local dimming feature on the NANO85 is very different, so our recommended setting is to turn it off. We were surprised to discover that disabling local dimming decreased the TV's ability to smooth out gradients. When we reran the test with local dimming enabled on the NANO85, it performed nearly identically to the NANO90.

Finally, we tested the Amazon Fire TV Omni Series to validate the results of the Amazon Fire TV 4-Series, as the 4-Series performed better than expected in these tests. The results were consistent, as these two TVs show very similar processing. The dark area of the scene looks a bit different on each TV, but this is almost entirely due to the uniformity of each panel; the smoothing process itself is very consistent across the brand. This confirms that, unexpected as it is, the Amazon TVs really are good at smoothing out gradients.

Amazon Fire TV 4-Series with smoothing

Amazon Fire TV Omni Series with smoothing

The new results confirm the quality of our evaluation. Test results are consistent within each brand, and TVs from the same company that share a panel technology and processor all score within the margin of error. Some of these results are still surprising, but after going through service menus to confirm the processors used and comparing them with similar models, we're confident in our results.

Audio Passthrough

Issues with our test

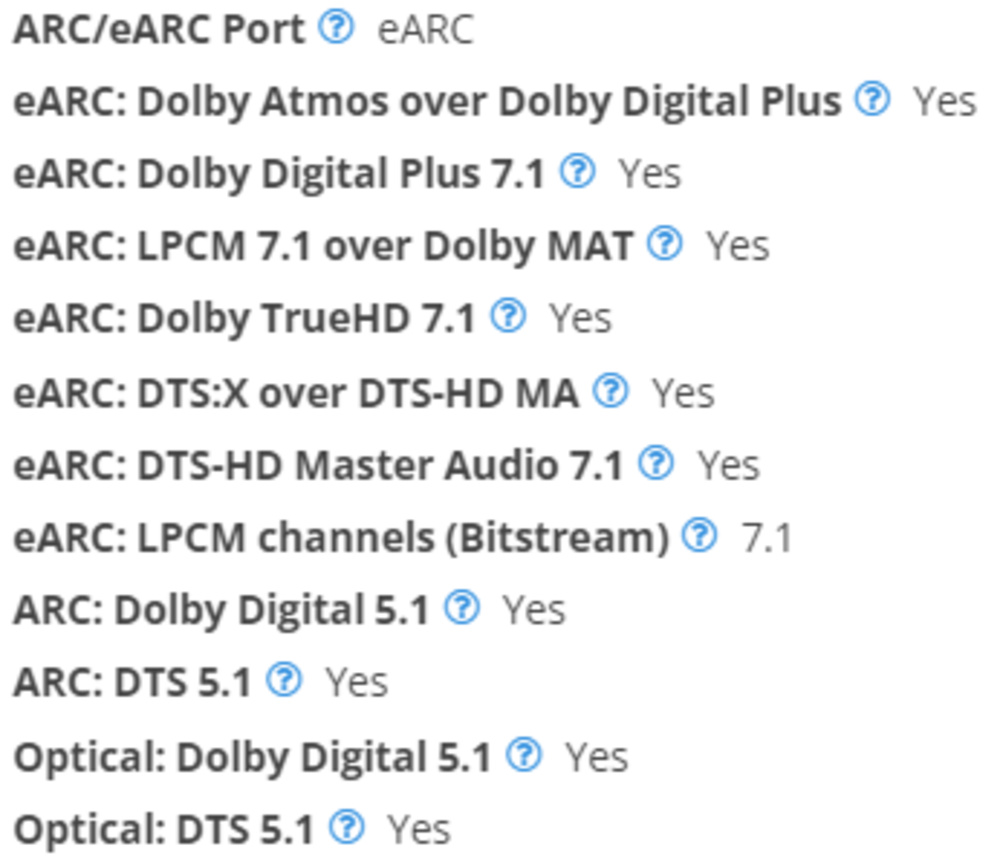

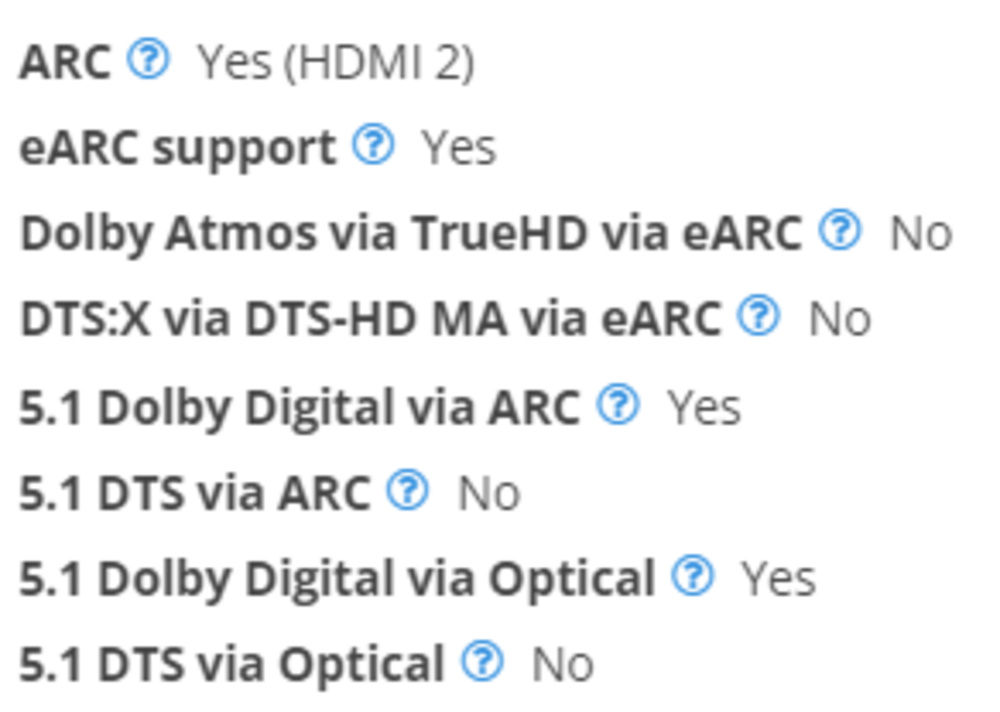

With this update, we wanted to completely revamp our audio testing and ensure that it presents our readers with the full picture of which formats a TV supports. Apart from our test coverage, these changes are mainly motivated by an abundance of caution rather than specific issues with our tests. We wanted to be sure that our tests present the true user experience and to eliminate any external issues that could affect the validity of our results. There were three main areas we wanted to improve: the clarity of our test results, our test coverage, and the future-proofing of our test source.

So what was wrong with our test results, and why did we need to improve clarity? Take the LG NANO75 2022 below on the left as an example. It supports eARC but fails our Dolby Atmos via TrueHD via eARC test. The reason for this failure is unclear, though, and it isn't obvious whether it supports Atmos or not. Is it the Dolby Atmos metadata that it doesn't support, or does it lack the bandwidth or processing capabilities necessary to pass a lossless audio format like TrueHD? With our new testing, we now split these tests so that each one only tests for one specific thing. Now, look at the Sony X95K on the right. The new Dolby Atmos over Dolby Digital Plus test makes it clear that the TV supports Atmos metadata, and since it supports Dolby TrueHD, it clearly also supports the bandwidth necessary for lossless Atmos.
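The benefit of splitting the tests is that each combination of results has a single reading. A hypothetical helper (the function and argument names are illustrative only) that turns the two split results into a plain-language conclusion might look like this:

```python
def explain_atmos_support(atmos_over_dd_plus: bool, truehd: bool) -> str:
    """Interpret the split Atmos and TrueHD passthrough results (hypothetical helper)."""
    if atmos_over_dd_plus and truehd:
        return "Passes Atmos metadata and has the bandwidth for lossless TrueHD Atmos"
    if atmos_over_dd_plus:
        return "Passes Atmos metadata, but not lossless TrueHD"
    if truehd:
        return "Passes lossless TrueHD, but not Atmos metadata"
    return "Passes neither Atmos metadata nor lossless TrueHD"


if __name__ == "__main__":
    # Under a single combined test, the last three cases would all just read "fail".
    for result in [(True, True), (True, False), (False, True), (False, False)]:
        print(result, "->", explain_atmos_support(*result))
```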

Our second goal with this update was to improve our test coverage. Although our older tests were good, they lacked a few key tests that people often asked us about. First, we didn't test LPCM, which was an issue for some devices, like the Nintendo Switch, that only support LPCM for surround audio. Adding a test for LPCM (Bitstream) was fairly straightforward; we could use the Murideo's built-in speaker sweep to test every channel without needing a custom file. Once we started testing, we noticed that the results varied a lot from one TV to another. Many TVs can't pass all 7.1 channels when using LPCM, but all of them pass 2.0 LPCM just fine. We wanted to expose that difference, especially since many sources that can only send LPCM only support two channels anyway, so a TV that fails 7.1 LPCM may still work perfectly with them.

This led us to change the test from a simple Yes/No to showing the number of channels supported, so you can see just how well the TV supports LPCM. We also encountered an issue with one TV that didn't play the channels in the right order. The Murideo is configured to send the speaker sweep in the following layout: RRC_RLC_RR_RL_FC_LFE_FR_FL, but unfortunately, that specific TV didn't preserve the channel order. We also encountered an issue with the TCL 4 Series/S446 2021 during internal testing where it wouldn't play anything from the right channel.
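Reporting a channel count instead of Yes/No can be illustrated with a small hypothetical helper that compares where each swept channel was sent against where it was actually heard. The layout string is the one quoted above; the function name, the dictionary input format, and the way results are tallied are assumptions for illustration.

```python
from typing import Dict, List, Optional

# Channel layout of the Murideo speaker sweep, as quoted in the changelog
LAYOUT = "RRC_RLC_RR_RL_FC_LFE_FR_FL"


def evaluate_sweep(heard: Dict[str, Optional[str]]) -> Dict[str, object]:
    """Hypothetical helper: `heard` maps each sent channel to the speaker it came
    out of, or None if it was silent. Channels missing from `heard` count as silent."""
    channels: List[str] = LAYOUT.split("_")
    correct = [ch for ch in channels if heard.get(ch) == ch]
    silent = [ch for ch in channels if heard.get(ch) is None]
    misrouted = [ch for ch in channels if heard.get(ch) not in (None, ch)]
    return {"channels_passed": len(correct), "silent": silent, "misrouted": misrouted}


if __name__ == "__main__":
    stereo_only = {"FL": "FL", "FR": "FR"}
    print(evaluate_sweep(stereo_only)["channels_passed"])  # 2
    swapped = {ch: ch for ch in LAYOUT.split("_")}
    swapped["RL"], swapped["RR"] = "RR", "RL"  # rear channels played out of order
    print(evaluate_sweep(swapped)["misrouted"])  # ['RR', 'RL']
```

Both failure modes described above fit this shape: a TV that mixes up the order shows up under `misrouted`, and one that drops the right channel shows `FR` under `silent`.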

We also repeat this test using a Dolby MAT container. It's done mainly for Apple TV 4k users, as some users have reported that their Apple TV only outputs Dolby MAT. We test this with LPCM audio, as that appears to be what the Apple TV 4k sends, but in this case, the test isn't really about the audio format; we just want to make sure that the TV can forward the Dolby MAT container properly without mixing up the channels.

We wanted to create similar test patterns for DTS and DTS-HD MA, since the Murideo doesn't have built-in sweeps for those formats. Unfortunately, even though we could generate appropriate test files that worked with VLC, they weren't played back properly when we uploaded them to the Murideo. We worked with Murideo's support team to try to solve this issue, but we couldn't fix it. As a result, we have to rely on the built-in files for these two tests. These files send all channels at once, so unlike most of our other tests, we rely on the audio receiver to tell us whether it's receiving 5.1 or 7.1 channels.

Second, we didn't test Dolby Digital Plus, a modernized version of Dolby Digital that can carry Dolby Atmos metadata and is very popular with streaming services. This relatively new format has essentially replaced Dolby Digital, so we needed to test for it. Even though this audio format is almost exclusively used by the native streaming apps on smart TVs, we chose to test it with our Murideo. There are a few reasons for this. TV apps can change and break, and we didn't want our test to be influenced or limited by the apps available. Unfortunately, this also means that your real-world experience with the native apps could differ from our results. It's arguably the biggest outstanding gap in our audio passthrough testing. Even if we did test specific apps on the TV, streaming services could decide to stop supporting Dolby Digital Plus tomorrow, and all of our results would be invalid, so we decided that the best course of action was to focus on the TV's capabilities rather than specific apps.

Finally, we removed the port that used to be listed next to the Yes/No on the ARC test. This information is already provided in our inputs section, where most users would expect to find it, and where it's the most useful. Knowing which port is the ARC/eARC port is mainly helpful for knowing if it'll interfere with your planned connection scheme. If you only have two ports that support HDMI 2.1 bandwidth, and one of those is also the eARC port, then you can't take full advantage of an Xbox Series S|X and a PS5 simultaneously. Providing this information in the audio passthrough section didn't make sense, as it's useless to users without knowing which ports are the high-bandwidth ones.
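The port conflict described above can be checked mechanically. In this sketch, the port names and capabilities are hypothetical, and it simply assumes that a soundbar connected over eARC occupies the eARC port, leaving only the remaining HDMI 2.1 ports for sources.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HdmiPort:
    name: str
    hdmi21: bool  # supports full HDMI 2.1 bandwidth
    earc: bool    # doubles as the ARC/eARC port


def free_hdmi21_ports(ports: List[HdmiPort], soundbar_on_earc: bool) -> List[str]:
    """Names of HDMI 2.1 ports left for sources once the soundbar is connected."""
    return [p.name for p in ports
            if p.hdmi21 and not (soundbar_on_earc and p.earc)]


def fits_two_consoles(ports: List[HdmiPort], soundbar_on_earc: bool) -> bool:
    """True if an Xbox Series S|X and a PS5 can both use HDMI 2.1 ports."""
    return len(free_hdmi21_ports(ports, soundbar_on_earc)) >= 2


if __name__ == "__main__":
    tv = [  # hypothetical port layout
        HdmiPort("HDMI 1", hdmi21=False, earc=False),
        HdmiPort("HDMI 2", hdmi21=False, earc=False),
        HdmiPort("HDMI 3", hdmi21=True, earc=True),
        HdmiPort("HDMI 4", hdmi21=True, earc=False),
    ]
    print(fits_two_consoles(tv, soundbar_on_earc=True))   # False
    print(fits_two_consoles(tv, soundbar_on_earc=False))  # True
```

This is also why the port information lives in the inputs section: the check needs both the eARC flag and the bandwidth of every port.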

62 TVs Updated

We have retested popular models. The test results for the following models have been converted to the new testing methodology. However, the text might be inconsistent with the new results.

- Amazon Fire TV 4-Series

- Amazon Fire TV Omni QLED Series 2022

- Amazon Fire TV Omni Series

- Hisense A6H

- Hisense U6G

- Hisense U6GR

- Hisense U6H

- Hisense U7G

- Hisense U7H

- Hisense U8G

- Hisense U8H

- Insignia F50 QLED

- LG A1 OLED

- LG A2 OLED

- LG B2 OLED

- LG C1 OLED

- LG C2 OLED

- LG G2 OLED

- LG NANO75 2022

- LG NANO85 2021

- LG NANO90 2021

- LG QNED80 2022

- LG QNED90

- LG UP7000

- LG UQ8000

- LG UQ9000

- Samsung AU8000

- Samsung Q60B

- Samsung Q70A

- Samsung Q80B

- Samsung QN85A

- Samsung QN85B

- Samsung QN900B 8K

- Samsung QN90A

- Samsung QN90B

- Samsung QN95B

- Samsung S95B OLED

- Samsung The Frame 2022

- Samsung The Terrace

- Samsung TU7000

- Sony A80J OLED

- Sony A80K/A80CK OLED

- Sony A90J OLED

- Sony A90K OLED

- Sony A95K OLED

- Sony X80K/X80CK

- Sony X85K

- Sony X90J

- Sony X90K/X90CK

- Sony X95K

- TCL 4 Series/S455 2022

- TCL 6 Series/R646 2021 QLED

- TCL 6 Series/R648 2021 8k QLED

- TCL 6 Series/R655 2022 QLED

- TCL S546

- TCL S555

- Vizio M Series Quantum X 2022

- Vizio M6 Series Quantum 2021

- Vizio M6 Series Quantum 2022

- Vizio M7 Series Quantum 2021

- Vizio P Series Quantum 2021

- Vizio V Series 2022

LG NANO75 2022 - No Atmos support, but it's unclear why
