See the previous 1.0 changelog.

See the next 1.2 changelog.

Goal

With our 1.1 test bench update, we've added three new tests and changed several others to provide more relevant information and help you determine which keyboard is right for your needs.

We've received lots of great suggestions and requests about improvements we can make to how we test keyboards. One of the goals of this update is to address Hardware Customizability, which has been a frequent request from the community and our users. Our other two new tests provide dedicated test groups devoted to Macro Programming and Wireless Mobile Compatibility, which we previously addressed in a less focused way within other test groups. We've also modified the Computer Compatibility and Configuration Software test groups, removing several tests that were no longer relevant. Lastly, we've added objective tests to evaluate the noise keyboards make in our Typing Noise test.

Summary Of Changes

Below is a summary of the changes we've made in this update. More detailed information about the changes made to each test is available below this table.

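| Test Group | Change |
|---|---|
| Hardware Customizability | New test group covering hot-swappable switches, switch stem shape, and stabilizers. |
| Typing Noise | Added an objective average dBA measurement and a 'High Pitch Clicks' test, replacing the old subjective result. |
| Macro Keys and Programming | New test group covering dedicated macro keys, 'Macro Programming With Software' (moved from Software & Programming), and a new 'Onboard Macro Programming' test. |
| Extra Features | Split the Wheel test into Rotary Knob and Scroll Wheel, renamed the 'Hot Keys' result to 'Non-Dedicated', and removed the Macro Programmable Keys test. |
| Keyboard Compatibility | Separated computer compatibility from mobile compatibility and added a test for media control keys. |
| Wireless Mobile Compatibility | New test group for mobile operating systems, including a media keys test. |
| Software & Programming | Now Configuration Software. Removed the score and the Account Required, Cloud Storage, and Ease of Use tests, replaced the image with a video, and moved Macro Programming to Macro Keys and Programming. |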

Hardware Customizability

The goal of the test - why does it matter?

More people are interested in customizing certain hardware elements of their keyboards, and many keyboard manufacturers have begun incorporating more customizable elements in their designs. This test aims to provide information about whether a keyboard's hardware is designed to be customizable. It addresses various customization elements, including hot-swappable switches, switch stems, and stabilizers.

Creating our test - what we tried and what issues we encountered

The first decision we needed to make with this test was how to approach the concept of customizability. We considered two approaches. The first involved analyzing the extent to which a keyboard can be customized. The second involved analyzing customizability while also considering the market availability of customizable parts. The second method introduces risk because we can only account for some customizability options, and we can't anticipate the future market availability of parts. For this reason, we chose the first approach but made some effort to account for the second, which we'll detail later.

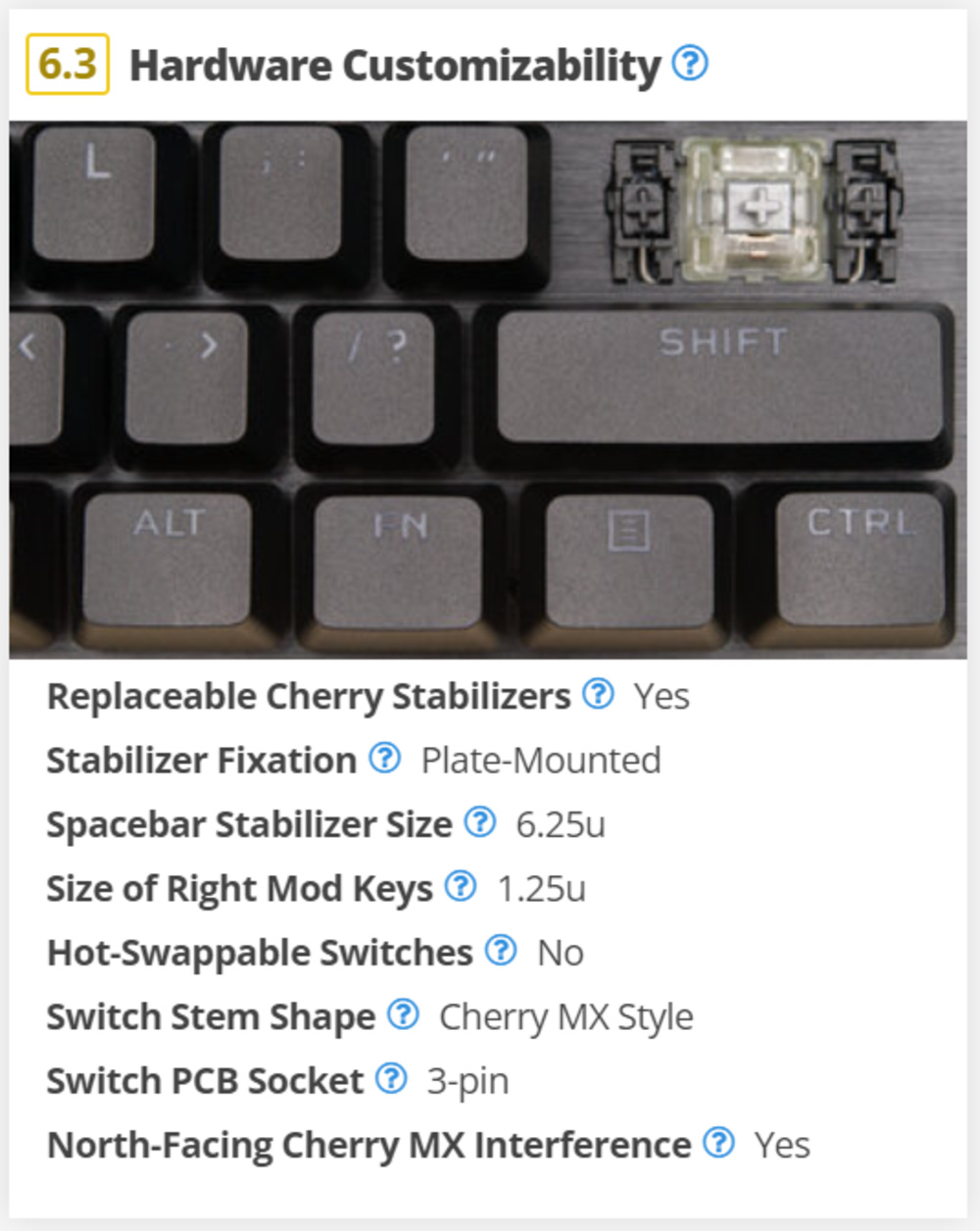

An example of the new Hardware Customizability box.

After settling on our approach, we turned to determining which customization aspects matter most to most users. From reading threads online and listening to feedback from our users, we decided the most important customization options relate to a keyboard's switches and stabilizers. We considered additional elements, including the keycap profile and keyboard layout. However, these elements don't directly impact how customizable a keyboard is and are best handled in separate tests.

We're already planning a future test bench update to address keycaps in a dedicated category, so let us know if you have any suggestions in the discussions.

For switch customizability, we first focused on whether the switches were non-replaceable, soldered, or hot-swappable. Later we simplified this approach to focus on hot-swappable switches since most users can't easily customize other switches, soldered or otherwise. Additional focus is placed on evaluating the Switch Stem Shape, which is important because it dictates what keycaps are compatible with the switches.

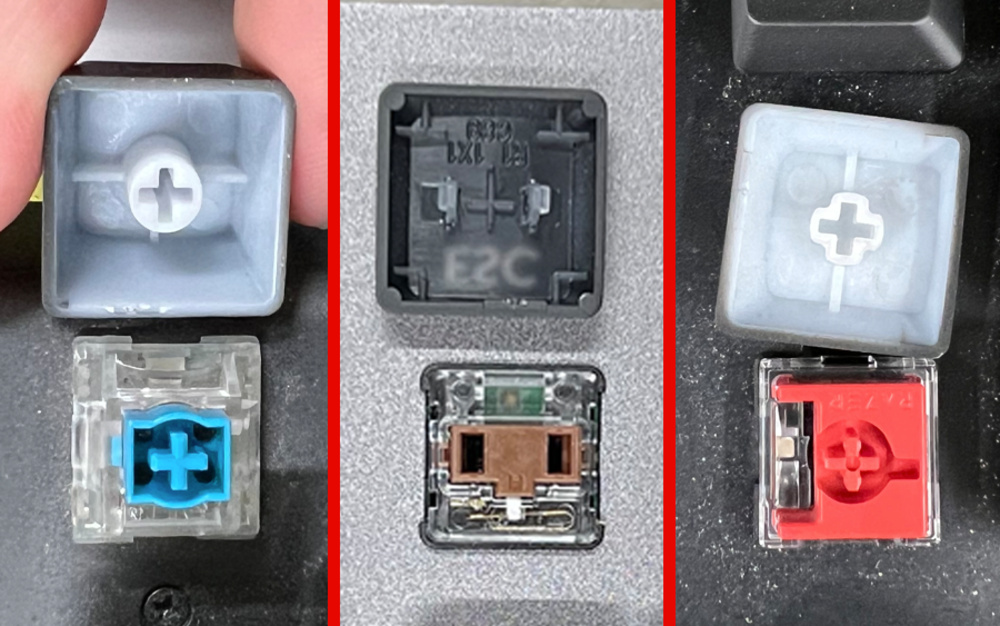

Examples of three different Stem Shapes. As you can tell, not all keycaps are compatible with all switches.

For stabilizer customizability, our initial template accounted for many stabilizer types. However, we found this created uncertainty when determining scores. Instead, we focused on whether a keyboard has Replaceable Cherry-type stabilizers. We chose this approach to keep our test objective because Cherry-type stabilizers are by far the most common stabilizer type on the market. For example, of the first 20 keyboards we've tested on Test Bench 1.1, 10 use Cherry stabilizers, 8 aren't designed to be customizable, and 2 use a mix of Cherry stabilizers and something else. You can see every keyboard we've tested on Test Bench 1.1 and whether it has replaceable Cherry stabilizers on this table. The downside of this decision is that we may need to change the approach of this test if other stabilizer types become more popular in the future.

Finally, we identified other aspects that matter to anyone interested in customizing their keyboard and added them as tests in this box. These include the mounting type of the stabilizers and the stabilizer size standard for both the spacebar and the right 'mod' keys. We also list the socket type of the keyboard's PCB and whether the keyboard uses north-facing switches that could interfere with Cherry-profile keycaps. Overall, we identified these as the most important aspects for most people who are interested in customizing their keyboard; however, as always, if you feel we missed anything, let us know in the discussions.

A hot-swappable socket designed for 3-pin switches.

An example of a plate-mounted Cherry MX style stabilizer.

In conclusion, this test group evaluates several aspects of a keyboard's customizability, primarily focusing on switches and stabilizers. These tests assess the most common customizability options and omit more in-depth options, like lubing and soldering. We're confident this test provides enough information for most people to evaluate whether a keyboard has the most desirable customizability options for their needs.

For scoring, we aimed to weigh the different tests according to how desirable they would be for most users. For this reason, the Hot-swappable switches and Switch Stem Shape tests carry the highest scoring weight. Within those tests, Cherry-style switches and stem shapes score higher because they're the most common and, by extension, the most desirable.
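To illustrate how a weighted score of this kind can work, here is a minimal sketch. The weights, test names, and the 0 to 10 scale below are hypothetical placeholders chosen for illustration. The only detail taken from the text above is that the hot-swappable and stem shape tests weigh the most.

```python
# Hypothetical weights for illustration only. The text above states that the
# hot-swappable and stem shape tests carry the most weight, but it does not
# give the actual numbers.
WEIGHTS = {
    "hot_swappable": 4,
    "stem_shape": 3,
    "replaceable_cherry_stabilizers": 3,
}


def customizability_score(results: dict[str, float]) -> float:
    """Combine per-test results (each from 0.0 to 1.0) into a 0-10 score.

    Tests missing from `results` count as 0.0.
    """
    weighted_sum = sum(
        weight * results.get(name, 0.0) for name, weight in WEIGHTS.items()
    )
    return round(10 * weighted_sum / sum(WEIGHTS.values()), 1)


# A board with hot-swappable sockets and Cherry-style stems,
# but stabilizers that aren't replaceable.
example = customizability_score({"hot_swappable": 1.0, "stem_shape": 1.0})
```

With these placeholder weights, a keyboard that passes only the two highest-weighted tests still earns most of the available score, which is the effect the weighting is meant to have.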

Due to the nature of this kind of test, we can't please everyone. We expect some users will prefer more in-depth information about customizability than what we provide. As new trends in keyboard customizability become more common, we'll evaluate the need to expand our test coverage.

Typing Noise

Issues with our previous test

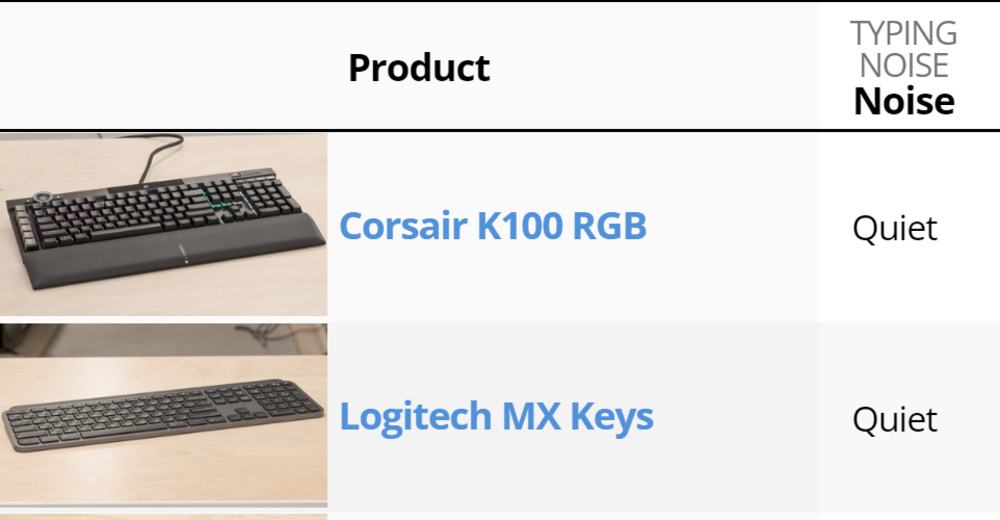

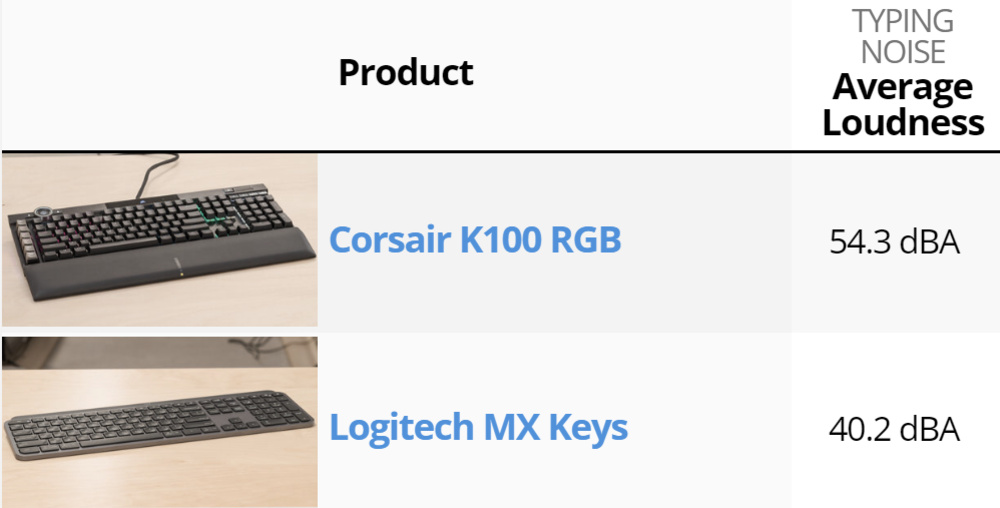

The previous Typing Noise test didn't objectively measure a keyboard's loudness, making it hard to get a granular enough idea of which keyboards were louder or quieter than one another. It consisted of only two elements: a video showing someone typing on the board and a subjective assignment of 'very quiet', 'quiet', or 'loud'.

While the video helped provide a good idea of what the typing experience sounded like, it made it difficult to compare the volume of different keyboards objectively. Meanwhile, because we lumped all volumes into three categories ('very quiet', 'quiet', or 'loud'), there were situations where two different keyboards that were assigned the same result could produce very different levels of typing noise. For example, in the screenshots below, you can see that the Logitech MX Keys has an average loudness of 40.2 dBA, while the Corsair K100 RGB has an average loudness of 54.3 dBA. However, on our old subjective test, they were both listed as being 'Quiet'.

On Test Bench 1.0, both keyboards were listed as 'Quiet'.

On Test Bench 1.1, the results are much more granular and give a much better representation of the volume difference.

Improving our test - what we tried and what issues we encountered

We've decided to keep the video aspect of our test that demonstrates the typing experience, but we've now added a dB reader in the frame that measures the sound in decibels and replaces our old subjective scores.

We considered using a machine or having a person press a specific key multiple times to provide our measurement. However, we felt this wouldn't be accurate, as it isn't a realistic depiction of how people use their keyboards. For example, the speed, pressure, and angle at which you hit a key can affect the volume. Different-sized keys can also make different sounds at different volumes. Therefore, we kept the previous way of testing, which involves a person typing actual sentences on a keyboard, mimicking real-world usage.

We express how loud a keyboard is using an average dBA value, which gives every keyboard an objectively comparable figure and gives you a relatable number for how loud it is. However, we discovered in testing that this figure doesn't always reflect real-world usage. For example, keyboards that use 'clicky' switches tend to be much more distracting, even if they have the same dBA average as a keyboard with non-'clicky' switches. We experienced this in the office when comparing our Keychron S1 (with Low Profile Gateron Brown switches) to our Razer Ornata V3 (with 'clicky'-like membrane switches). Despite both keyboards having similar average dBA values, the Ornata is noticeably more distracting in a quiet office environment and sounds louder to the human ear due to its higher-pitched sound. To account for this, we added a test called 'High Pitch Clicks', which results in either a 'Yes' or a 'No' and changes the overall 'Typing Noise' score accordingly. Testers assign a 'Yes' subjectively to keyboards with a noticeably distracting clicking sound. This way, you can tell if a keyboard will be more distracting, even if its average dBA suggests otherwise. We also considered a test that looked at only the top 5% of a keyboard's recorded volume values. However, we found that this still didn't line up with real-world subjective listening and that considering the high-pitched clicks was more accurate.
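The two figures discussed above, the average and the loudest 5% of readings, can be sketched as follows. This is only an illustration under stated assumptions: it takes a plain arithmetic mean of the meter readings, and the function names and sample values are hypothetical. The text doesn't specify how the average is actually computed.

```python
import math
from statistics import mean


def average_dba(readings: list[float]) -> float:
    """Arithmetic mean of the sound meter readings, in dBA (an assumption)."""
    return round(mean(readings), 1)


def top_5_percent_mean(readings: list[float]) -> float:
    """Mean of the loudest 5% of readings (at least one reading)."""
    # One reading in twenty is 5%; round up so short recordings still count.
    count = max(1, math.ceil(len(readings) / 20))
    loudest = sorted(readings, reverse=True)[:count]
    return round(mean(loudest), 1)


def typing_noise_summary(readings: list[float], high_pitch_clicks: bool) -> dict:
    """Bundle the objective figures with the subjective Yes/No result."""
    return {
        "average_dba": average_dba(readings),
        "top_5_percent_dba": top_5_percent_mean(readings),
        "high_pitch_clicks": "Yes" if high_pitch_clicks else "No",
    }


# Hypothetical sample: mostly quiet typing with one loud peak.
sample = [40.0] * 19 + [60.0]
summary = typing_noise_summary(sample, high_pitch_clicks=False)
```

In this sample, a single loud peak barely moves the average but dominates the top 5% figure. Neither number says anything about pitch, which is why a separate Yes/No result is carried alongside them.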

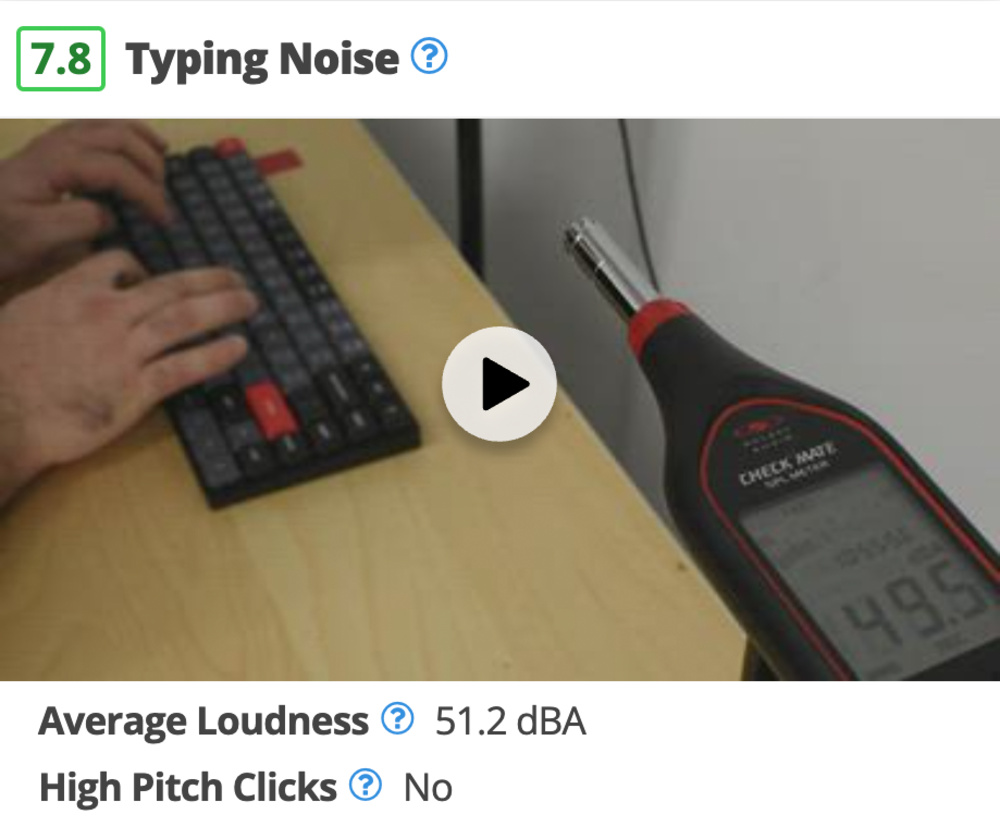

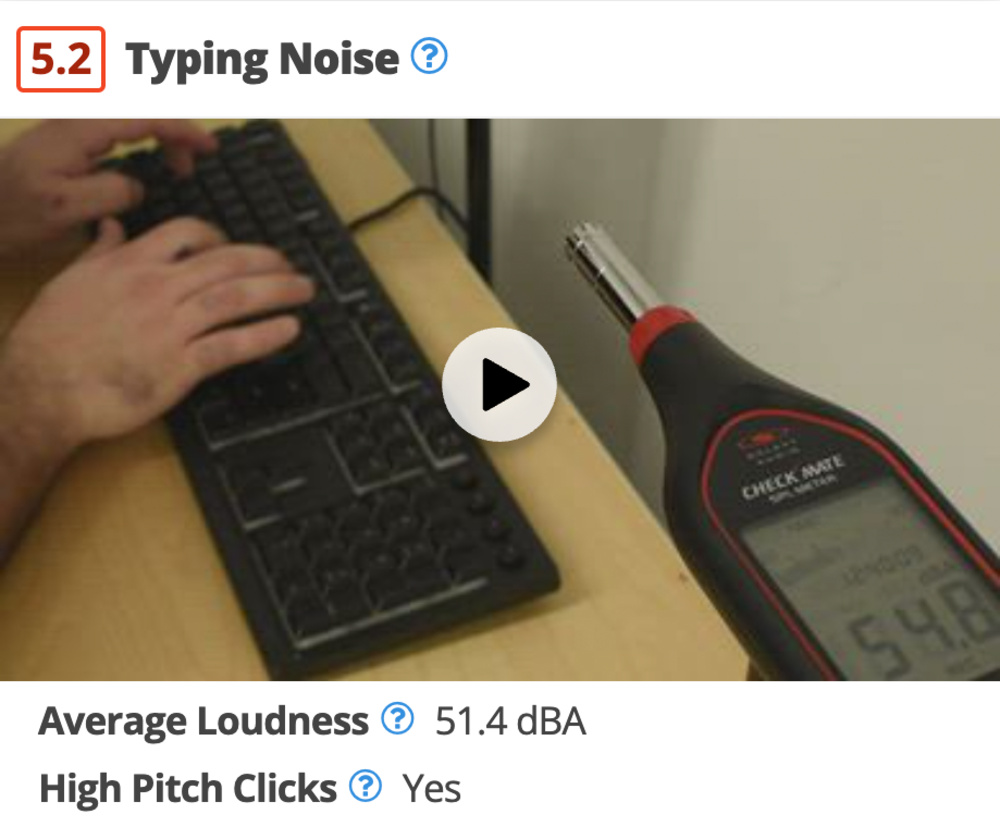

An image of the Typing Test with the dB reader visible in the frame.

The Keychron S1. Despite being almost the same average volume as the Razer Ornata V3, it appears much quieter and less distracting due to its lack of high-pitched clicks.

The Razer Ornata V3. Despite being almost the same average volume as the Keychron S1, it's much more distracting due to the high-pitched nature of its clicks.

Ultimately, this test doesn't try to determine how loud specific switch types are, but rather the level of noise that the entire keyboard makes while you're typing on it, as many other factors can determine your keyboard's volume. Therefore, two boards that use the exact same switches may differ in volume. Currently, we don't look into the volume difference of individual aspects of a keyboard (like the switches, stabilizers, or O-rings), though it's possible we could look more into this in future updates.

Click here to see a table of all keyboards that we've tested on Test Bench 1.1, listed from loudest to quietest.

Macro Keys and Programming

The goal of the test - why does it matter?

The goal of this test is to combine and simplify our macro programming tests. The new tests provide information about the number of dedicated macro keys a keyboard has and whether macros can be configured directly on the keyboard or through the customization software. This information is important if you want dedicated macro keys on your keyboard for gaming or to improve your workflow.

Creating our test - what we tried and what issues we encountered

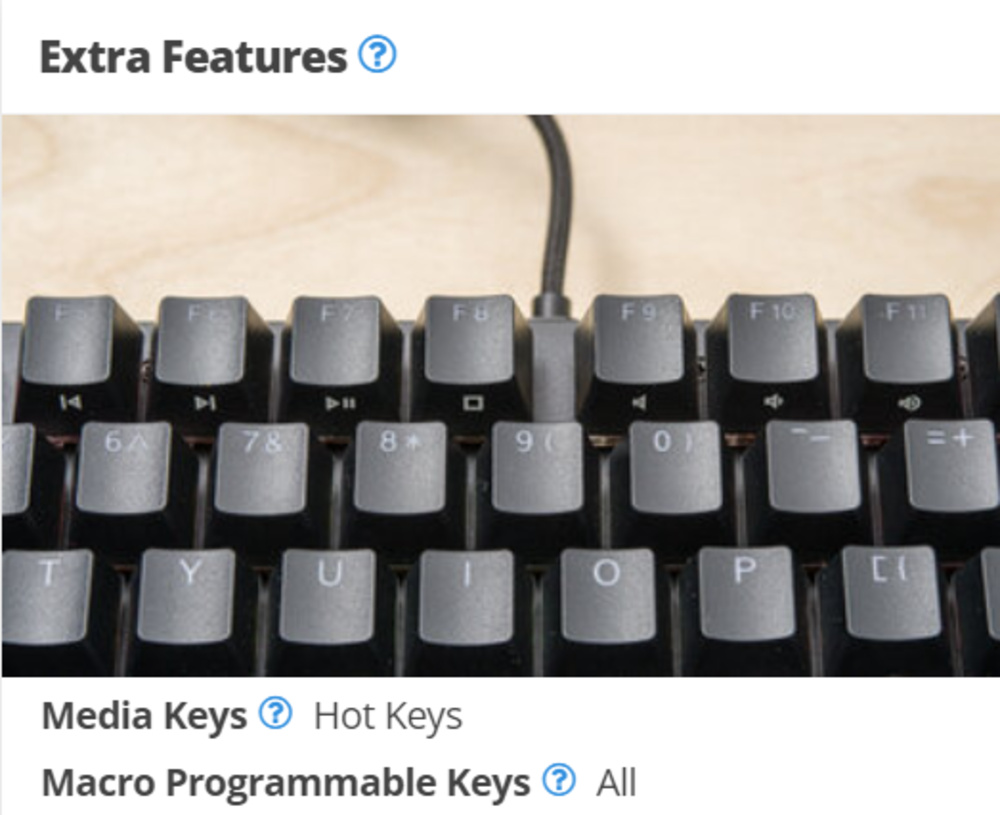

On the previous test bench, our reviews had two tests addressing macro programmability. The 'Macro Programmable Keys' test in the Extra Features box aimed to evaluate whether all keys were macro programmable, while the 'Macro Programming' test in the Software & Programming box evaluated whether you could program macros using the software.

The old 'Macro Programmable Keys' test was part of the Extra Features box on Test Bench 1.0.

The old 'Macro Programming' test was part of the Software & Programming box on Test Bench 1.0.

We chose not to keep our 'Macro Programmable Keys' test from the Extra Features box for two reasons. First, it wasn't clear whether some or all keys were programmable using the software. Second, even if a keyboard doesn't support macros with its official software, you can always use third-party software (like the popular Pulover’s Macro Creator or Mini Mouse Macro, among others).

As for the 'Macro Programming' test from the Software & Programming box, we renamed it 'Macro Programming With Software' and moved it into the new Macro Keys And Programming box. We've also added a new 'Onboard Macro Programming' test, which evaluates whether you can program macros using the keyboard alone without using the software, like on the Ducky One 3. This is important if you prefer not to have to install extra software on your PC but still want to set macros on your keyboard.

The new Macro Keys and Programming box on Test Bench 1.1.

We also considered adding further tests to evaluate the depth and flexibility of macro programming. For example, some keyboards offer specific macro functions that automatically change when using specific software. However, features like this are rare and can vary from keyboard to keyboard, so we feel they're best described in the supporting text.

Extra Features

Issues with our test

We haven't received any community requests to expand the coverage of our Extra Features test. However, we felt improvements could be made, as several test names and test results in this group were worded unclearly. We also identified that the Macro Programmable Keys test was out of place, given our new Macro Keys and Programming test group.

Improving our test - what we tried and what issues we encountered

We identified two instances of unclear language used in this test group: the name of our Wheel test and the name of the Hot Keys test result for the Media Keys test.

In the case of our Wheel test, on Test Bench 1.0, we only listed whether a keyboard had a wheel but didn't specify what type of wheel. If you prefer a rotary knob for changing volume, knowing only that a keyboard has a wheel isn't ideal. For example, the Razer BlackWidow V4 Pro has a scroll wheel instead. Ultimately, we decided to split this test to describe the two hardware wheels commonly found on keyboards: Rotary Knobs and Scroll Wheels.

An example of a Rotary Knob

An example of a Scroll Wheel

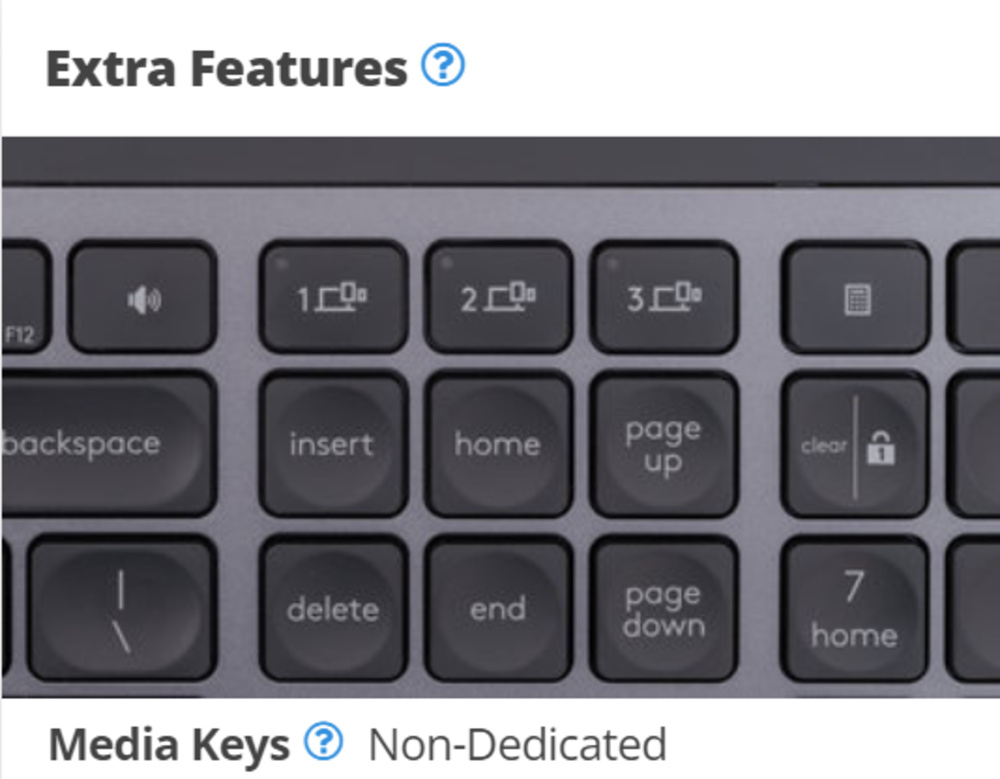

For our 'Media Keys' test, we previously used the result 'Hot Keys', which didn't make it clear whether a keyboard had a single dedicated key or whether you had to press a combination of keys. For example, some keyboards have dedicated buttons along the top that allow you to change tracks or volume; however, others (like the Logitech MX Keys) require you to hold an Fn key and hit one of the F keys to skip tracks or change the volume. On Test Bench 1.1, we now use the term 'Non-Dedicated', as it's clearer that these features require a combination of keys rather than a single key.

The Logitech MX Keys on Test Bench 1.0

The Logitech MX Keys on Test Bench 1.1

We also removed the Macro Programmable Keys test from the Extra Features section. We've outlined why we've removed this test in the details of the Macro Keys and Programming section above.

Issues with our test

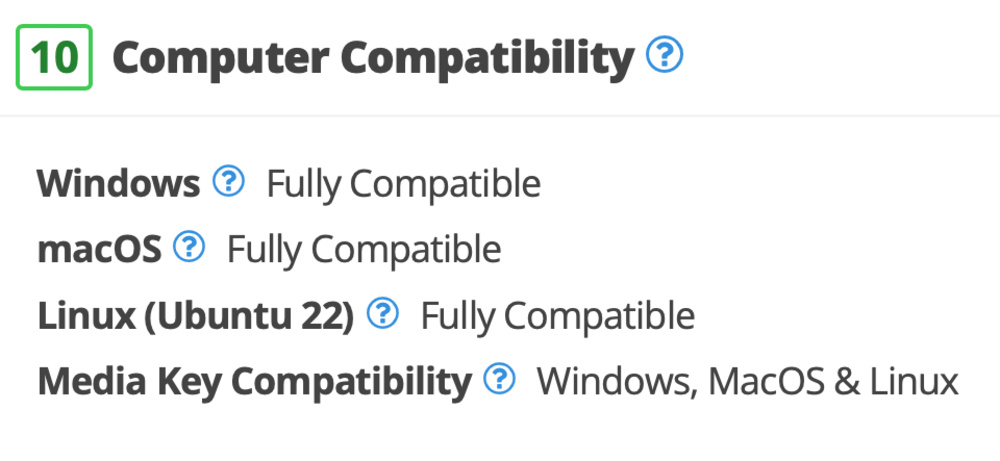

Our old Keyboard Compatability test evaluated both computer and mobile compatibility together. This was problematic if you were only interested in either computer or mobile compatibility because it only presented a single score. We also noted some inconsistencies with this test in how we determined whether a keyboard was fully or partially compatible with certain operating systems.

The old Keyboard Compatibility box for the Glorious GMMK 2. Because all Operating Systems were listed, the score wasn't helpful if you only care about mobile or PC use. The screenshots down below show the new boxes for the same keyboard.

Improving our test - what we tried and what issues we encountered

Early on, we realized that accounting for every feature and compatibility issue isn't possible and could jeopardize the test score if something we didn't account for wasn't compatible. Also, listing every potential compatibility issue would create a long list of tests that would be confusing to understand.

We considered two solutions. The first would evaluate overall compatibility and have individual tests for essential features, including alphanumeric keys, Fn key functions, and dedicated media keys. While this solution would allow us to stop using the ambiguous 'Partially' compatible test result, it could create a situation where a keyboard scores a perfect 10 even if some of its non-essential features aren't compatible. Additionally, where most Fn functions are compatible but some aren't, this would again result in a 'Partially' compatible result.

The second solution was to keep the same test format we currently have but separate computer compatibility from mobile compatibility. While this doesn't solve the issue of 'Partial' compatibility, it provides a better focus for this test.

Ultimately, we decided on a hybrid of both solutions that we considered. We chose to separate computer compatibility from mobile, though our score is still based on whether a keyboard is 'fully,' 'partially,' or 'not compatible' with a specific OS. However, based on all the keyboards we've tested and the compatibility issues we have encountered, our methodology is now better defined with clear distinctions between these results, resulting in better consistency in our reviews. An additional advantage of splitting our computer compatibility from mobile compatibility tests is that we can now add more detailed text to address computer compatibility alone without needing to address mobile compatibility in the same area. We also added a separate test specifically for the media control keys on a keyboard, so you can easily see which operating system(s) the keys are compatible with. You can see a table of Computer Compatibility for all keyboards tested on our 1.1 Test Bench here.
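To show why a single combined score can mislead, here is a minimal sketch that compares one combined score against separate computer and mobile scores. The point values, the 0 to 10 scale, the simple averaging, and the operating system lists are all hypothetical. It doesn't attempt to reproduce any score from an actual review.

```python
# Hypothetical point values for each result. The text above names the three
# results but does not say how they translate into a score.
POINTS = {"fully": 1.0, "partially": 0.5, "not compatible": 0.0}


def compatibility_score(results: dict[str, str]) -> float:
    """Average the per-OS results and scale them to a 0-10 score."""
    if not results:
        return 0.0
    total = sum(POINTS[result] for result in results.values())
    return round(10 * total / len(results), 1)


# A hypothetical wired-only keyboard: works on every computer OS listed,
# but can't connect to any mobile device.
computer = {"Windows": "fully", "macOS": "fully", "Linux": "fully"}
mobile = {
    "Android": "not compatible",
    "iOS": "not compatible",
    "iPadOS": "not compatible",
}

combined_score = compatibility_score({**computer, **mobile})
computer_score = compatibility_score(computer)
mobile_score = compatibility_score(mobile)
```

Under these assumptions, the combined score lands in the middle and describes neither use case, while the split scores give a clear answer for each.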

The new Computer Compatibility box for the Glorious GMMK 2. If you only plan on using this keyboard with a computer, the score of 10 is much more accurate than the previous Compatibility score of 7.2.

The new Wireless Mobile Compatibility box for the Glorious GMMK 2. Compared to the old score of 7.2, it's now much clearer that if you want this keyboard for mobile use, it isn't compatible with any device.

Wireless Mobile Compatibility

The goal of the test - why does it matter?

We've received feedback from the community showing us that many people are looking for a keyboard to use on the go with their mobile devices. Previously, we evaluated and scored mobile compatibility alongside computer compatibility. This created problems if you were only interested in either computer or mobile compatibility but only had a single score to reference. We also identified some inconsistencies in how we determined when a keyboard was fully or only partially compatible with an operating system.

Creating our test - what we tried and what issues we encountered

We approached this test the same way we approached the Computer Compatibility test above. We acknowledged that we couldn't account for all compatibility features but should focus on the compatibility of the most popular mobile operating systems with essential elements of the keyboard, including alphanumeric keys and Fn key functions. We also added a new test that evaluates whether a keyboard's media keys are compatible with the most popular mobile operating systems.

We're confident our internal methodology is now better defined to make more consistent decisions about what constitutes full or partial compatibility based on the number of keyboards we've tested and some of the common compatibility issues we've encountered. You can see a table of wireless mobile device compatibility on our 1.1 Test Bench here.

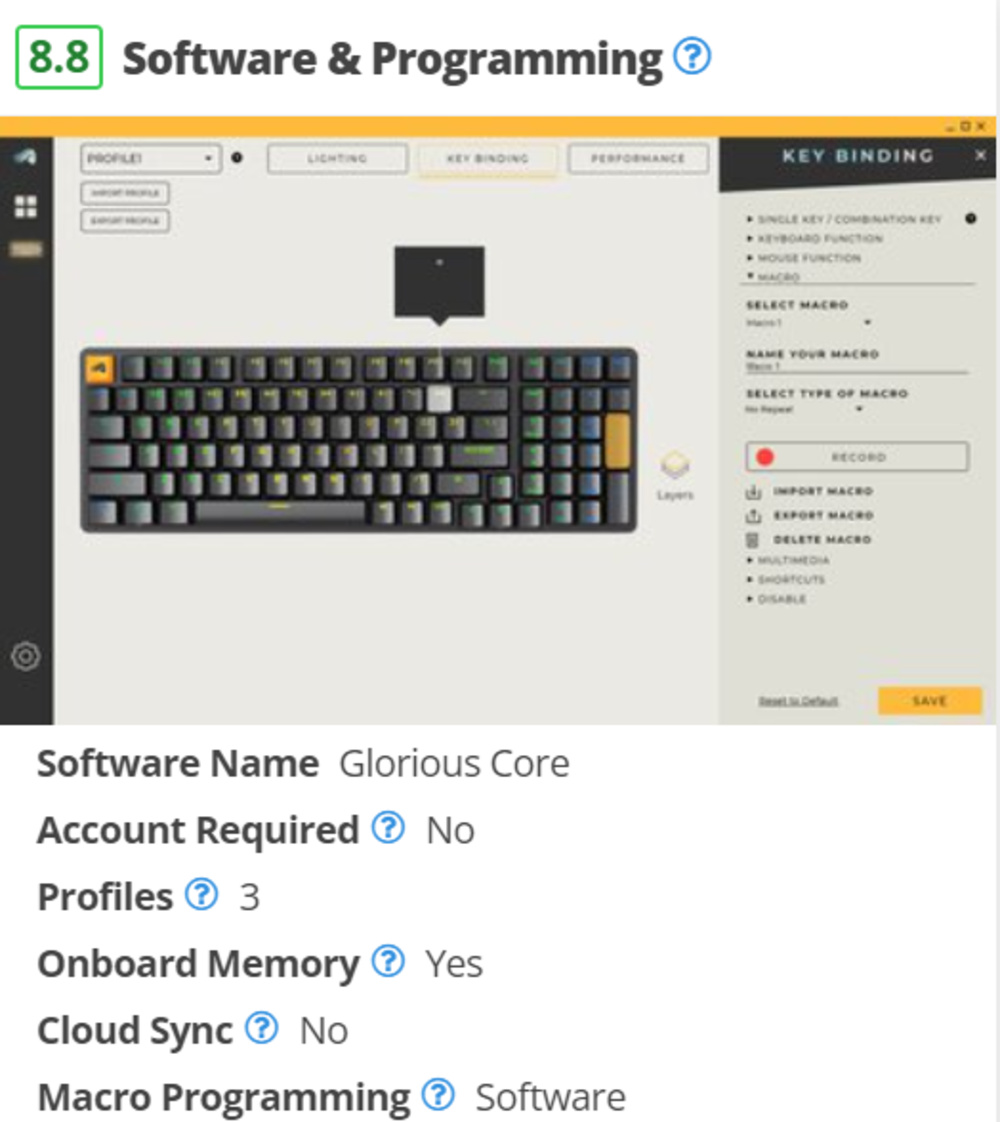

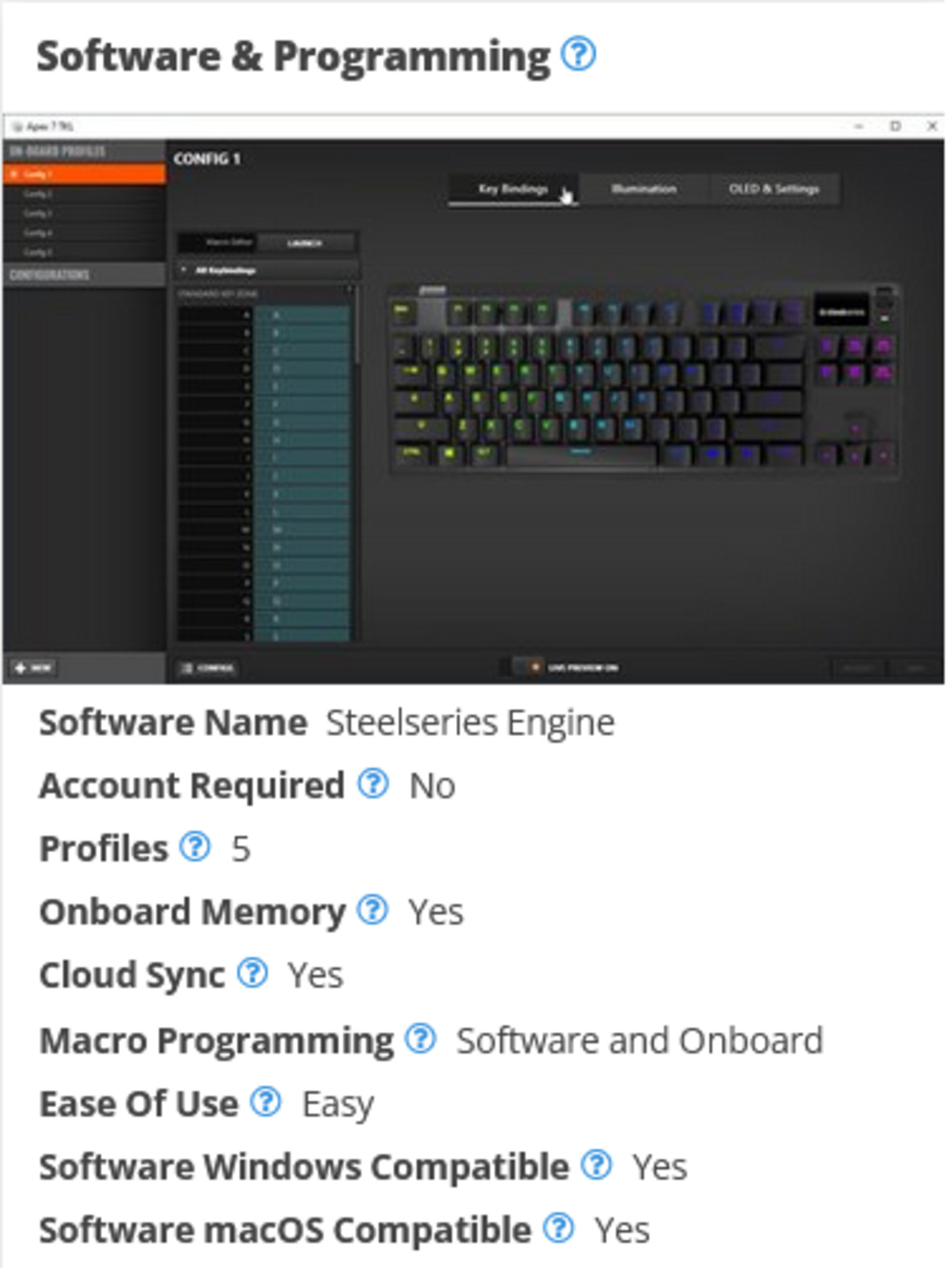

Configuration Software

Issues with our test

We highlighted several issues with our Configuration Software test. Firstly, we wanted to evaluate how this test group was scored because our scores didn't align with people's experiences using their keyboard software. We also felt it was important to do a better job of visually presenting a keyboard's software interface and its options. Additionally, we noted several tests in this group were no longer relevant in the context of the wider keyboard market or warranted removal because they don't play an important role in the decision to buy a keyboard that suits your needs. Lastly, we identified that our Macro Programming test was out of place, given the creation of our new Macro Keys and Programming test group.

Improving our test - what we tried and what issues we encountered

Based on user feedback we've received, it's clear that the number of features a given software has doesn't translate into how good or helpful it is to users. For this reason, we decided to remove the score from this test group.

Previously this test relied on a single image to give a visual impression of what the software looked like and how it operated. We decided to replace this image with a video that browses through each section of the software and does a much better job of illustrating how the software is laid out and the depth of features it offers.

After deciding to remove our overall score and add a video element that explores the software in-depth, we turned to identify tests that were no longer relevant. We singled out the Account Required, Cloud Storage, and Ease of Use tests for removal. In the case of the Account Required test, we've noted that very few software options require you to create an account to use them at this point, and when this issue does pop up, we'll address it in our text. Regarding Cloud Storage, this feature is widespread on keyboards from major manufacturers but uncommon for smaller manufacturers. In either case, we've observed that whether or not the software has this feature doesn't factor heavily into a buying decision—if you care about this and want to see it in our reviews, please let us know in the discussions. Furthermore, onboard storage is the simpler and more flexible alternative that is more important to more people. Ease of Use is the last test we decided to remove because we're confident our new video element more organically demonstrates how the software is laid out and how easy it is for you to use.

Finally, we decided to remove the Macro Programming test from this test group and move it into the newly created Macro Keys and Programming test group, which focuses on macro programmability.

The old Software & Programming box for the SteelSeries Apex 7 TKL.

The new Configuration Software box for the SteelSeries Apex 7 TKL, including a video.

Conclusion

We're always interested in any comments or questions you have about this or any future updates. Feel free to reach out to us in the discussions. If you're interested in seeing all the keyboards we've tested on Test Bench 1.1 to date, check out this table.

105 Keyboards Updated

We have retested popular models. The test results for the following models have been converted to the new testing methodology. However, the text might be inconsistent with the new results.

- Apple Magic Keyboard 2017

- Apple Magic Keyboard for iPad 2021

- Apple Magic Keyboard with Touch ID and Numeric Keypad

- ASUS ROG Falchion

- ASUS ROG Strix Flare II Animate

- Corsair K100 AIR

- Corsair K100 RGB

- Corsair K55 RGB

- Corsair K55 RGB PRO XT

- Corsair K60 RGB PRO Low Profile

- Corsair K65 RGB MINI

- Corsair K70 PRO MINI WIRELESS

- Corsair K70 RGB MK.2

- Corsair K70 RGB TKL

- Corsair K83 Wireless

- Corsair K95 RGB PLATINUM XT

- Drop CTRL

- Ducky One 2 Mini V1

- Ducky One 3

- Ducky Shine 7

- Dygma Raise

- ErgoDox EZ

- EVGA Z12

- EVGA Z15

- EVGA Z20

- GLORIOUS GMMK

- GLORIOUS GMMK 2

- GLORIOUS GMMK PRO

- HyperX Alloy Core RGB

- HyperX Alloy Elite 2

- HyperX Alloy FPS RGB

- HyperX Alloy Origins 60

- IBM Model M

- iClever Tri-Folding Keyboard BK08

- IQUNIX F97

- Keychron C2

- Keychron K10

- Keychron K3 (Version 2)

- Keychron K8 Pro [K2 Pro, K3 Pro, K4 Pro, etc.]

- Keychron Q6

- Keychron S Series

- Keychron V Series

- Kinesis Freestyle Edge RGB

- Leopold FC900R

- Logitech Combo Touch

- Logitech ERGO K860

- Logitech G PRO Keyboard

- Logitech G PRO X Keyboard

- Logitech G213 Prodigy

- Logitech G413 SE

- Logitech G512 Special Edition

- Logitech G513

- Logitech G613 LIGHTSPEED

- Logitech G715

- Logitech G815 LIGHTSYNC RGB

- Logitech G910 Orion Spark

- Logitech G915 LIGHTSPEED

- Logitech K380

- Logitech K400 Plus

- Logitech K585

- Logitech K780

- Logitech K845

- Logitech MX Keys

- Logitech MX Mechanical

- Logitech Signature K650

- Microsoft Bluetooth Keyboard

- Microsoft Sculpt Ergonomic Keyboard

- Microsoft Surface Ergonomic Keyboard

- Microsoft Surface Keyboard

- Mountain Everest Max

- NuPhy Air75

- NZXT Function

- Obinslab Anne Pro 2

- Razer BlackWidow

- Razer BlackWidow Elite

- Razer BlackWidow V3

- Razer BlackWidow V3 Pro

- Razer BlackWidow V4 Pro

- Razer Cynosa V2

- Razer DeathStalker V2 Pro

- Razer Huntsman

- Razer Huntsman Elite

- Razer Huntsman Mini Analog

- Razer Huntsman Tournament Edition

- Razer Huntsman V2

- Razer Huntsman V2 Analog

- Razer Ornata Chroma

- Razer Ornata V2

- Razer Ornata V3

- Razer Pro Type Ultra

- Redragon K552 KUMARA RGB

- ROCCAT Pyro

- ROCCAT Vulcan II Max/Mini

- ROYAL KLUDGE RK61

- SteelSeries Apex 3

- SteelSeries Apex 5

- SteelSeries Apex 7 TKL

- SteelSeries Apex 9

- SteelSeries Apex Pro

- SteelSeries Apex Pro Mini Wireless

- SteelSeries Apex Pro TKL (2023)

- Varmilo VA87M

- Wooting two HE

- ZAGG Pro Keys

- ZSA Moonlander